- Offer Profile

- The focus of the Human-Automation Systems (HumAnS) Lab is centered around the concept of humanized intelligence, the process of embedding human cognitive capability into the control path of autonomous systems. Specifically, we study how human-inspired techniques, such as soft computing methodologies, sensing, and knowledge representation, can be used to enhance the autonomous capabilities of intelligent systems. The lab's efforts address issues of autonomous control as well as aspects of interaction with humans and the surrounding environment. In our research efforts, we draw on the disciplines of robotics, cognitive sensing, machine learning, computational intelligence, and human-robot interaction.

Projects

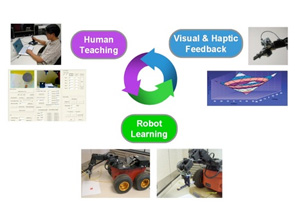

- The focus of the lab is on control of autonomous systems that operate in the real world. Thus, real-time capability is a necessary component of any technology used for control. To accomplish this goal, we develop techniques that allow real-time decision-making based on perceptions of the environment. Coupled with perception, autonomous reasoning allows a system to reason about actions based on its knowledge of the environment and information characterizing its current state within the environment. To incorporate reasoning for autonomous control, we embed human capabilities directly into the system, with the aim of developing a robotic control system that has human-equivalent performance even after the interactions are complete.

Human-System Interaction

- One of the key issues in human-automated system interaction scenarios is deciding which decisions and tasks are best done by humans, by automated systems, or by a combination of the two. In general, these two entities possess complementary skill sets. Humans perform extremely well when dealing with unforeseen events or new tasks. On the other hand, autonomous systems excel at performing routine work tasks and in well-defined problem areas. In the area of human-system interaction, our research focuses on using the basic scientific and engineering methodologies necessary for improving human-agent system performance, resulting in a synergistic method for decision-making and task coordination in real-world scenarios.

Assistive Robotics - Learning from Teleoperation

- Robots are rolling out of the research labs and walking, crawling and flying into our homes. As such, the description of a robot owner is changing, for example from the researcher or the science enthusiast to the homemaker who needs a little help with the windows. To ease the challenge of programming robots for the new robot owner/operator, learning from demonstration has been proposed. This form of learning features the operator demonstrating what they would like the robot to do and the robot learning how to make it happen.

One of the challenges in the robotic learning portion of this process is that many robots do not yet have the ability to actively engage in the process. It is often the case that robots passively learn based on information provided to them. They are not equipped to perform meta-analysis about their experience, or at least not in a short enough time for it to be relevant in this learning process.

This research seeks to uncover quantitative metrics that can be generated by these robotic students. Such metrics can enable the robot to perform tasks like determining how much more training time it needs to master a task, assessing whether a particular set of instructions is likely to be helpful in the learning process, or even determining whether the provided instruction is coming from someone who knows what they are doing.

Paradigm

Omni + Pioneer

Omni + Simulation

Assistive Robotics - Using Robots to Aid with Physical Therapy

- Mechatronic and robotic systems for neurorehabilitation can generally be used to record information about motor performance (position, trajectory, interaction force/impedance) during active movements. Being able to objectively assess the performance of a patient through repeatable and quantifiable metrics has been shown to be an effective means for rehabilitation therapy. However, to date, we are unaware of any research regarding child upper limb rehabilitation techniques using robotic systems; rather, the majority of these systems are only being applied to stroke patients.

Logically, children are naturally engaged by toys, especially those that are animate. However, while there are a number of robotic toys that have been shown to be engaging to children, many of the studies focus solely on children with autism. The goal of this project is to fuse play and rehabilitation techniques using a robotic design to induce child-robot interaction that will be entertaining as well as effective for the child.

Using a mimicking approach to engage the child in a gaming scenario, the humanoid demonstrates an action and asks the child to perform the same action. While the child is performing the action, the humanoid records and processes the child's movements as input data in order to determine whether the movements indeed match its own. Utilizing image processing techniques such as Motion-History Imaging (MHI) and Maximally Stable Extremal Regions (MSER) to simplify the image and recognize the type of motion respectively, coupled with Dynamic Time Warping (DTW) for sequence matching and other physical therapeutic metrics, the humanoid is able to notify the child whether or not he or she has performed the movement correctly.
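As a rough illustration of the sequence-matching step, the sketch below implements the textbook DTW distance between two one-dimensional sequences. It is not the lab's implementation: the function name `dtw_distance`, the absolute-difference local cost, and the joint-angle traces are all assumptions made for this example.

```python
import math


def dtw_distance(a, b):
    """Textbook dynamic time warping distance between two 1-D sequences.

    Uses absolute difference as the local cost (an assumption for this
    sketch) and runs in O(len(a) * len(b)) time.
    """
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b holds
                cost[i][j - 1],      # b advances, a holds
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# Hypothetical joint-angle traces in degrees (illustrative values only).
robot_demo = [0, 30, 60, 90, 60, 30, 0]
child_slow = [0, 0, 30, 30, 60, 90, 90, 60, 30, 0]   # same motion, slower
child_other = [0, 10, 10, 10, 10, 10, 0]             # a different motion

same_motion_cost = dtw_distance(robot_demo, child_slow)
other_motion_cost = dtw_distance(robot_demo, child_other)
```

Because DTW allows samples to be repeated during alignment, the slower repetition of the same motion aligns with zero cost, while the different motion yields a positive cost. A threshold on this cost, chosen empirically, could then separate matching from non-matching movements.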

Education and Robotics

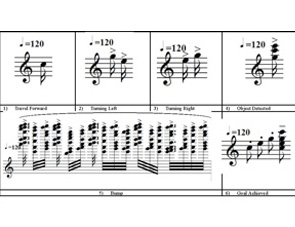

Accessible Robotic Programming for Students with Disabilities (ARoPability)

- Robotics-based activities have been shown to encourage non-traditional students to consider careers in computing and have even been adopted as part of the core computer-science curriculum at a number of universities. Unfortunately, the concept of the non-traditional student in this arena has not traditionally extended to encompass students with visual or physical impairments. As such, we seek to investigate the use of alternative interface modalities to engage students with disabilities in robotics-based programming activities. We seek to answer questions such as “What characteristics of robotics-based activities need to be transformed to engage students with visual impairments?” “What technologies can be adapted to enable achievement of robotics-based programming activities for students with physical impairments?” “Are there existing teaching modalities already employed by educators that can be used to train these new computing professionals?” and “What methods can be exploited to broaden participation in computing for students with visual or physical impairments?” This NSF effort targets middle and high school students in order to engage them during the critical years and hosts a number of robotics camps in conjunction with the Center for the Visually Impaired, the National Federation of the Blind, and Children's Healthcare of Atlanta at Scottish Rite.

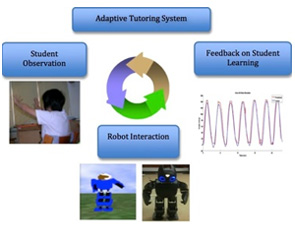

Robot Tutor-in-a-Box

- In recent years, there has been a verifiable increase in the use of virtual agents for tutoring, ranging from the K-12 classroom to medical schools. Although results are varied, studies have shown evidence that the use of tutoring agents results in improvements in math education, reading, and even the practice of surgical skills. Unfortunately, training individuals from diverse backgrounds requires customized training approaches that align with individual learning styles. Scaffolding is a well-established instructional approach that facilitates learning by incrementally removing and/or augmenting training aids as the learner progresses. When multiple training aids are combined (i.e. multimodal interfaces), a trainer, either physical or virtual, must make real-time decisions about which aids to provide throughout the training scenario. Unfortunately, a significant problem occurs in implementing scaffolding techniques, since the speed and selection of the training aids must be strongly correlated to the individual traits of a specific trainee. As such, in this work, we investigate methods for identifying the different learning styles of students and use this information to adapt the training sequence of a robot tutor. This involves investigating the use of multi-modal interfaces, such as those associated with various forms of textual, graphical, and audible interaction, as well as socially-interactive robot behaviors to engage and build pathways to individualize learning.

Mars 2020

- Computer adventure games have grown in appeal to the younger generation, and yet, exposure to adventure games alone does not provide direct mechanisms to improve computer-science related skills. As such, we have developed a robotic adventure game that embeds high-level computer science concepts as part of the game scenario. The explicit purpose of this delivery mechanism is to introduce middle school students to fundamental concepts of programming. The underlying model is that by capitalizing on the popularity of computer games to teach basic computer science concepts to younger students, we can increase their desire to pursue a STEM-related career in the future. These robotics and computer science concepts are taught through a number of Saturday and summer middle-school camps held at Georgia Tech throughout the year.

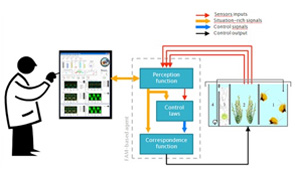

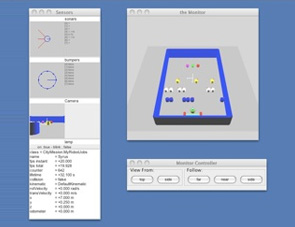

Space Flight Life Support Systems

- Current research focuses on the development of a situation-based human-automation integration method for the assessment and operation of heterogeneous dynamic systems (HDS), composed of humans, physical systems, and computer agents, whose behavior depends on their situation. The main challenge consists in making use of computational intelligence methods to develop numerical tools and criteria consistent with control systems theory and principles in cognitive engineering to enable the integration and safe operation of these systems. Fields of application include physical and cyber security systems, smart grid operation, bioengineering systems and life support, disaster monitoring and recovery, epidemic monitoring and control, intelligent transportation systems, financial and investment services, and tactical and operational battlefield command and control systems. The purpose is to contribute to the methodological development of situation-based and user-centered design approaches for the integration of HDS.

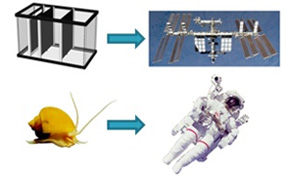

In this project, we make use of a small-scale aquatic habitat for experiments relevant to the integration, automation, and operation of bioregenerative life support systems (BLSS), which make use of biological processes to transform biological by-products back into consumables. These systems grow in importance with the development of long-duration human space exploration systems. The aquatic habitat is used as a working analogy to a space habitat, as snails or other invertebrates (consumers) are to astronauts. BLSS combine physico-chemical and biological processes with the purpose of improving the autonomy of man-made habitats and the life quality of their living organisms. These processes require energy and time to transform wastes and by-products back into consumables. Consequently, their maintenance may impose considerable workload on human operators. In addition, the slow response of BLSS and their demand for human attention create vulnerabilities that, unattended, may translate into human errors, performance deterioration, and failures. By properly combining sensor information, computational resources, and user interfaces, this work develops a methodology to integrate humans and automation toward the proper and safe operation of BLSS, among other bioengineering systems, and HDS.

Human-System Interaction

- As explorers, humans are superior to robots due to their ability to think critically, their resilience in the face of unexpected situations, and their adaptation to new scenarios. On the other hand, it is unrealistic to send humans in the near term on remote planetary missions or to hazardous terrain environments here on Earth. Although robots have limited perception and reasoning, and their capabilities are limited by the foresight and insight of their own developers, it is more feasible to advance robot technology to function as explorers than to send humans. In the HumAnS Lab, we focus on increasing the capability of robot vehicles to function in natural environments, such as those found on planetary surfaces, undersea, underground, and in remote geological locations here on Earth.

SnoMotes

- Many important scientific studies, particularly those involving climate change, require weather measurements from the ice sheets in Greenland and Antarctica. Due to the harsh and dangerous conditions of such environments, it would be advantageous to deploy a group of autonomous, mobile weather sensors, rather than accepting the expense and risk of human presence. To validate our methodologies for navigation in such environments, a set of prototype arctic rovers has been designed, constructed, and fielded on a glacier in Alaska.

The Human-Automation Systems (HumAnS) Lab has been developing reconfigurable robotic sensor networks and robotic vehicles for use in the exploration of remote planetary surfaces, such as Mars, and remote sites on Earth, such as Antarctica.

This particular set of projects has gone through various versions:

- Version 1 (Walking Robot)

- Version 2 (Byrobot)

- Version 3 (SnoMotes I) and Version 4 (SnoMotes II)

- Field Trials in Alaska (with Version 3 and 4)

Version 1 (Walking Robot)

- Our first version was a simple walking robot created to test our robotic sensor networks. As a result, the work done on later iterations could be focused on the hardware of the robots, moving us toward our future goal: creating an all-terrain, multi-directional exploratory vehicle.

Version 2 (Byrobot)

- The main idea behind the hardware component of this research project is to design, build, and control a low-cost mobile robot that can use both legs and wheels. Some of the legged-wheeled robots that currently exist have their wheels attached to an actuator located at the end of the robot leg. When the robot is commanded to walk, the wheel is stationary and the robot actually walks on its wheel. This causes a number of problems that hinder long-term and robust operation in remote environments.

The ByroBot was designed with 6 legs and 4 wheels. Each leg has 3 servo motors to provide 3 degrees of freedom for instituting a walking pattern. Each wheel is attached to a DC motor for 4-wheel drive. The robot body material is polycarbonate plastic, which is robust and lighter than aluminum or other metals. The robot is able to drive on its 4 wheels and roll over obstacles with its legs retracted upward (as shown in the CAD model and in the videos), and it can stand and walk in its legged configuration for navigating over larger obstacles. The Eyebot is the primary controller for this robot. It can be programmed in either C or assembly language. An additional controller, the Servo Controller, is interfaced with the Eyebot to allow control of up to 32 servo channels. The Eyebot and the Servo Controller communicate through their serial COM ports using a female-to-female null modem cable.

Why do we want these specifications?

The development of a new family of robotic vehicles for use in the exploration of remote planetary surfaces, such as Mars, and remote sites on Earth, such as Antarctica, is an ongoing process. Current robotic vehicles must traverse rough terrain having various characteristics such as steep slopes, icy surfaces, and cluttered rock distributions, to name a few. The goal of the Byrobot project is to design a new robotic mobility system that performs to optimum capability in remote environments, which leads to the idea of the Legged-Wheeled robot.

Field mobile robots must traverse long distances on hazardous terrain safely and autonomously using uncertain and imprecise information. Approaches such as traversability analysis, deliberative path planning with pre-stored terrain maps, and embedded reactive behavior have been used to address the problems of navigation in natural terrain, but successfully navigating between two designated points in rough terrain with minimal human interaction is still an open issue. Legged robots, compared with wheeled mobility platforms, offer many advantages due to their ability to traverse a wide variety of terrain, but the control of walking poses special challenges in natural environments. Even simple legged-robot platforms have a large degree of coupled interactions, and no single walking gait is suitable for all terrain surfaces. Walking surfaces can vary in a number of factors including traction properties, hardness, frictional coefficients, and bearing strength. To operate successfully within varying terrain environments, field mobile robots need an automatic gait adaptation method. The focus of our work is therefore on the development of a methodology that learns new walking gaits autonomously while operating in an uncharted environment, such as on the Mars planetary surface or in the remote Antarctic environment.

- When ByroBot stands, it is primarily supported by the high-torque servos that are at the "hip joint" of each leg. Joint torques were calculated to determine the torque needed for these servos so that ByroBot could support itself while standing. Kinematic analysis was done for the wheels to find velocity and position data, as well as the radius of curvature of the robot motion when the wheel pairs in its differential drive run at different linear velocities. Forward kinematic analysis was also done for the legs to find their "foot" positions based on the servo motor angles, which control the joint positions. By locating the positions of the feet that are in contact with the ground, a polygon of support can be determined for the robot while standing. Keeping the robot body center of mass inside this polygon of support at all times while walking will allow for optimum stability of the ByroBot.
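The two geometric checks described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the lab's analysis code: it uses the standard differential-drive relation for the radius of curvature and a convex-hull test for the polygon of support. The function names, track width, wheel speeds, and foot coordinates are hypothetical values chosen for the example.

```python
import math


def turning_radius(v_left, v_right, track_width):
    """Radius of curvature of the body centre for a differential drive.

    Positive values mean a counter-clockwise (left) turn; returns math.inf
    for straight-line motion. Wheel slip is ignored (an idealisation).
    """
    if v_left == v_right:
        return math.inf
    return (track_width / 2.0) * (v_right + v_left) / (v_right - v_left)


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); > 0 when o -> a -> b turns left."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def support_polygon(feet_xy):
    """Convex hull of the ground-contact feet (Andrew's monotone chain),
    returned as vertices in counter-clockwise order."""
    pts = sorted(set(feet_xy))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def com_is_supported(feet_xy, com_xy):
    """True if the ground projection of the centre of mass lies inside
    (or on the edge of) the polygon of support."""
    hull = support_polygon(feet_xy)
    if len(hull) < 3:
        return False  # fewer than three contacts cannot enclose an area
    return all(
        _cross(hull[i], hull[(i + 1) % len(hull)], com_xy) >= 0
        for i in range(len(hull))
    )


# Hypothetical numbers: 0.4 m track width, tripod stance with three feet down.
radius = turning_radius(v_left=0.5, v_right=1.0, track_width=0.4)
tripod = [(0.2, 0.15), (-0.2, 0.15), (0.0, -0.15)]
stable = com_is_supported(tripod, (0.0, 0.0))
tipping = com_is_supported(tripod, (0.3, 0.0))
```

With these values the radius comes out at about 0.6 m, the centred mass is supported, and the mass shifted 0.3 m sideways falls outside the tripod. A gait planner could run the same check for each stance phase before lifting the next set of legs.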

- Science exploration in unknown and uncharted terrain involves operating in an unstructured and poorly modeled environment. Several designs are plausible for operating in these types of environments. In order to guarantee success of robotic missions for the future, technologies that can enable multi-rover collaboration and human-robot interaction must be matured. The main hurdle with this focus is the cost and system complexity associated with deploying multiple robotic vehicles having the capability to survive long periods of time, as well as possessing multi-tasking capability. To address this issue, this research focuses on modularizing both hardware and software components to create a reconfigurable robotic explorer. The new Legged-Wheeled design robot possesses wheels, as well as legs, thus giving the robot the ability to traverse various terrains.

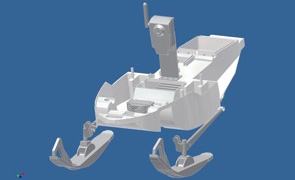

Version 3 (SnoMotes I) and Version 4 (SnoMotes II)

- SnoMotes I

To endow a rover with an inherent all-terrain drive system for navigation in Arctic environments, a 1/10 scale snowmobile chassis was selected for our prototype platform. The platform was modified to include an ARM-based processor running a specialized version of Linux. The motherboard offered several serial standards for communication, in addition to Wi-Fi and Bluetooth. A daughterboard provided an ADC unit and PWM outputs for controlling servos. The drive system was modified to accept PWM motor speed commands, and the steering control was replaced with a high-torque servo. For ground truth position logging, a GPS unit connects to the processor via the Bluetooth interface, while robot state and camera images are sent directly to an external control computer via the Wi-Fi link. To simulate the science objectives of a mobile sensor network, a weather-oriented sensor suite was added to the rover. The deployed instrument suite includes sensors to measure temperature, barometric pressure, and relative humidity.

- SnoMotes II

The main reason tracked vehicles are used for snow traversal is that the large area of the track distributes the vehicle weight, allowing it to “float” on the surface. Due to the mobility issues discovered with the original platform, a set of chassis modifications was designed and implemented. The original front suspension mechanism was replaced by a passive double-wishbone system, increasing the ski base by over 30%. The rear track system was replaced with a custom, dual-track design, which both widened the rear footprint and effectively doubled the snow contact surface area. A 500 W brushless motor and high-current speed controller drive the new track system. The overall increase in the platform width drastically improved the platform’s stability and roll characteristics.

Field Trials in Alaska (with Version 3 and 4)

- Field trials for the SnoMotes occurred in Juneau, Alaska. Based on the relevance of weather data, the similarity of the terrain to arctic conditions, and logistics, several test sites were selected for field studies across two glaciers. Lemon Creek Glacier has been the subject of annual mass balance measurements since 1953 as part of the Juneau Icefield Research Program (JIRP), making weather measurements in this area particularly relevant. Mendenhall Glacier is one of Alaska’s most popular tourist attractions. The current public interest in this particular site makes additional information valuable. Both sites are only accessible via helicopter. Several test sites were selected across the glaciers in order to test the system in a variety of glacial terrains. One area was completely covered with over a meter of soft snow and largely flat for several kilometers in any direction. Another was located at the lower edge of the northern branch of Mendenhall, near a bend in the glacier. Again, the site was completely snow covered, but much closer to the mountains. Due to the proximity of the Mendenhall Tower peaks and the bend in the path, the terrain exhibited large-scale undulations. Yet another was located in the upper plateau of the terminus. Here the underlying ice is exposed and the terrain is characterized by small, rolling hills 1-2 meters in height. Some crevasses are present in this area, and melt water pools in some of the small valleys.

Arctic Navigation

- Climate change is one of the major concerns of the scientific community. As such, scientists are always looking for new ways to collect weather data to help model and predict the impact of our society on the global climate. Specifically, weather data collected from arctic regions is considered valuable, as glacial regions are more sensitive to the effects of climate change. Recently, scientists have been considering deploying multiple robotic weather stations to Greenland or Antarctica to aid in this data collection.

For such a robotic system to be viable, each rover must be able to navigate to a desired location without relying on a human operator. However, glacial terrains present a variety of hazards apart from the obvious temperature extremes. Hard-packed snow dunes and softer snow drifts present steep inclines that must be overcome, vertical cracks in the ice sheet can easily swallow a small rover, and varying lighting conditions in the all-white environment make detecting these hazards difficult.

Nonetheless, current research is focused on developing a vision-based navigation system for arctic robots. Techniques for amplifying subtle terrain texture have proven effective at uncovering potential hazards, and methods for extracting visual landmarks in the snow-covered terrain have enabled rovers to track their progress towards their goal. Currently, methods are being explored to allow the rovers to create a 'mental' 3-D model of the terrain. With this model, the robots can plan efficient routes to their goal that minimize traversal through treacherous terrain.
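To make the route-planning idea concrete, the sketch below runs Dijkstra's algorithm over a small grid in which each cell carries a traversal cost. This technique is swapped in purely for illustration; the source does not say which planner the rovers use. The grid values, the use of `None` for impassable cells such as crevasses, and the name `plan_route` are assumptions for this example.

```python
import heapq


def plan_route(cost_grid, start, goal):
    """Dijkstra's algorithm on a 4-connected grid.

    cost_grid[r][c] is the cost of entering a cell (higher = more
    treacherous); None marks an impassable cell. Returns (path, total_cost),
    or (None, inf) when the goal cannot be reached.
    """
    rows, cols = len(cost_grid), len(cost_grid[0])
    best = {start: 0.0}       # cheapest known cost to reach each cell
    parent = {}               # back-pointers for path reconstruction
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue          # stale queue entry, a cheaper one was handled
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = cost_grid[nr][nc]
            if step is None:
                continue
            new_cost = cost + step
            if new_cost < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]


# Hypothetical terrain: 1 = firm snow, 9 = steep drift, None = crevasse.
terrain = [
    [1, 1, 1, 1],
    [1, 9, None, 1],
    [1, 9, 9, 1],
    [1, 1, 1, 1],
]
route, route_cost = plan_route(terrain, start=(0, 0), goal=(3, 3))
```

On this grid the planner skirts the drifts and the crevasse along the firm-snow border. In practice the per-cell costs would come from the terrain model rather than being hand-written.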

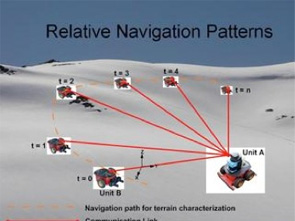

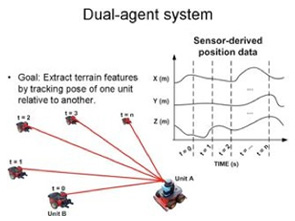

Multi-Agent Terrain Characterization Robot Survey System

- This work encompasses the theme of a robotic surveyor system capable of traversing harsh, variable terrain and intelligently discerning the optimal navigation strategy for achieving representative coverage. The coverage will enable earth scientists to extract more information about various test sites than is currently possible and improve safety by removing the human from potentially dangerous areas of interest. Traditional surveying techniques typically employ in situ (on ground) and remote (satellite) measurements; however, a significant amount of error is introduced at each level. This work has two benefits: 1) to minimize measurement error by leveraging satellite imagery in order to better influence the autonomous behavior of a ground-based robotic system and 2) to expand the allowable area of testing during field campaigns. Both of these benefits drive the goal of obtaining better representative coverage of terrain, improving the resolution of information provided to the earth science community. The primary contribution of this research is identifying what design considerations must be addressed when deploying a system of this type for such a purpose.

Initial field tests have been conducted in Juneau, AK, where accelerometer measurements were collected to confirm orientation information of a single HumAnS SnoMote on Mendenhall and Lemon Creek glaciers. Currently, I am outfitting multiple unmanned ground vehicles with a low-cost sensor suite composed of MEMS accelerometers to obtain critical orientation information.
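As a hedged illustration of how orientation can be derived from a three-axis accelerometer, the sketch below computes roll and pitch from the measured gravity vector. The axis and sign conventions (x forward, y left, z up, so a level and stationary vehicle reads roughly (0, 0, +1 g)) and the function name `tilt_from_accel` are assumptions for this example; the source does not describe the actual sensor mounting or processing.

```python
import math


def tilt_from_accel(ax, ay, az):
    """Estimate roll and pitch (degrees) from one accelerometer sample.

    Assumes the only acceleration sensed is gravity, so the estimate is
    meaningful only while the vehicle is stationary or moving slowly.
    Yaw (heading) cannot be recovered from an accelerometer alone.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


# Hypothetical readings in units of g.
level = tilt_from_accel(0.0, 0.0, 1.0)
rolled_45 = tilt_from_accel(0.0, math.sin(math.radians(45)), math.cos(math.radians(45)))
pitched_30 = tilt_from_accel(-math.sin(math.radians(30)), 0.0, math.cos(math.radians(30)))
```

Averaging several samples before calling the function would reduce the effect of vibration, at the cost of slower response.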