
H23: Flying Robots Research


Ecole Polytechnique Fédérale de Lausanne


- Offer Profile

- The Laboratory of Intelligent Systems

The Laboratory of Intelligent Systems (LIS) directed by Prof. Dario Floreano focuses on the development of robotic systems and artificial intelligence methods inspired by biological principles of self-organization. Currently, we address three interconnected research areas:

Flying Robots

Artificial Evolution

Social Systems

Product Portfolio

Mobile Robotics Research Projects

Bioinspired Vision-based Microflyers

- Taking inspiration from biological systems to enhance the navigational autonomy of robots flying in confined environments.

The goal of this project is to develop control strategies and neuromorphic chips for autonomous microflyers capable of navigating in confined or cluttered areas, such as houses or small built environments, using vision as the main source of information.

Flying in such environments poses a number of challenges that are not found in high-altitude, GPS-based unmanned aerial vehicles (UAVs). These include small size and slow speed for maneuverability, light weight to stay airborne, low-power electronics, and smart sensing and control. We believe that neuromorphic vision chips and bio-inspired control strategies are very promising methods for addressing these challenges.

The project is organized along three tightly integrated research directions:

Mechatronics of indoor microflyers (Adam Klaptocz, EPFL);

Neuromorphic vision chips (Rico Möckel, INI);

Insect-inspired flight control strategies (Antoine Beyeler, EPFL).

We plan to take inspiration from flying insects both for the design of the vision chips and for the choice of control architectures. For the design of the microflyers, by contrast, we intend to develop innovative solutions and improvements over existing micro-helicopters and micro-airplanes.

Our final goal is to better understand the minimal set of mechanisms and strategies required to fly in confined environments by testing theoretical and neurophysiological models on our microflyers.

A 10-gram microflyer that flies autonomously in a 7x6m test arena

The purpose of this ongoing experiment is to demonstrate autonomous steering of a 10-gram microflyer (the MC2) in a square room with different kinds of textures on the walls (the Holodeck). This will first be achieved with conventional linear cameras before migrating towards aVLSI sensors.

Artist's view of the project

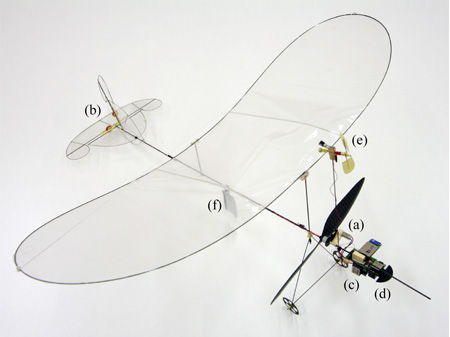

Microflyer

- The MC2 is based on a microCeline, a 5-gram living-room flyer produced by DIDEL, equipped with a 4mm geared motor (a) and two magnet-in-a-coil actuators (b) controlling the rudder and the elevator. When fitted with the electronics required for autonomous navigation, its total weight reaches 10 grams. The custom electronics consist of a microcontroller board (c) featuring a PIC18LF4620 running at 32MHz, a Bluetooth radio module (for parameter monitoring), and two camera modules, each comprising a CMOS linear camera (TSL3301) and a MEMS rate gyro (ADXRS150). One of these camera modules (d) is oriented forward, with its rate gyro measuring yaw rotations, and will mainly be used for obstacle avoidance. The second camera module (c) is oriented downwards, looking longitudinally at the ground, while its rate gyro measures rotation about the pitch axis. Each camera has 102 gray-level pixels spanning a total field of view of 120°. To measure its airspeed, the MC2 is also equipped with an anemometer (e) consisting of a free propeller and a Hall-effect sensor. This anemometer is placed in a region that is not blown by the main propeller (a). The 65mAh lithium-polymer battery (f) provides an autonomy of approximately 10 minutes.
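As a quick sanity check on the figures above, the short Python sketch below derives two quantities the text implies but does not state: the average angle covered by each camera pixel and the mean current drawn from the battery. It assumes the pixels are evenly spread over the 120° field of view and that the 65mAh battery is fully discharged over the roughly 10-minute flight; both are simplifications.

```python
FOV_DEG = 120.0       # total field of view of one linear camera
N_PIXELS = 102        # gray-level pixels per camera
BATTERY_MAH = 65.0    # lithium-polymer battery capacity
AUTONOMY_MIN = 10.0   # approximate flight time in minutes


def deg_per_pixel(fov_deg: float, n_pixels: int) -> float:
    """Average angle covered by one pixel, assuming even spacing."""
    return fov_deg / n_pixels


def mean_current_ma(capacity_mah: float, minutes: float) -> float:
    """Average current that would empty the battery in `minutes`."""
    return capacity_mah / (minutes / 60.0)


print(f"{deg_per_pixel(FOV_DEG, N_PIXELS):.2f} deg per pixel")
print(f"{mean_current_ma(BATTERY_MAH, AUTONOMY_MIN):.0f} mA average draw")
```

Under these assumptions each pixel covers about 1.18°, and the whole aircraft draws on the order of 390 mA on average.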

Analog Genetic Encoding (AGE)

Evolutionary Synthesis and Reverse Engineering of Complex Analog Networks

- The synthesis and reverse engineering of analog networks are recognized as knowledge-intensive activities for which few systematic techniques exist. Given the importance and pervasiveness of analog networks, there is well-founded interest in developing automatic techniques capable of handling both problems. Evolutionary methods appear to be one of the most promising approaches to achieving this objective.

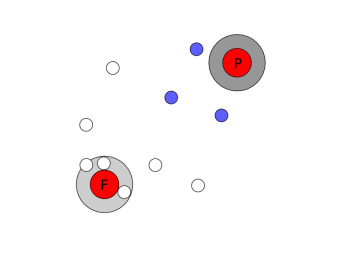

Analog Genetic Encoding (AGE) is a new way to represent and evolve analog networks. The genetic representation of Analog Genetic Encoding is inspired by the workings of biological genetic regulatory networks (GRNs). Like genetic regulatory networks, Analog Genetic Encoding uses an implicit representation of the interactions between the devices that form the network. This results in a genome that is compact and very tolerant of genome reorganizations, thus permitting genetic operators that go beyond the simple mutation and crossover operators typically used in genetic algorithms. In particular, Analog Genetic Encoding permits the duplication, deletion, and transposition of genome fragments, operations that are recognized as fundamental for the evolution and complexification of biological organisms. The resulting evolutionary system displays state-of-the-art performance in the evolutionary synthesis and reverse engineering of analog networks.
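To make the three genome-level operators mentioned above concrete, here is a minimal sketch of duplication, deletion and transposition acting on a genome stored as a plain Python string. Fragment boundaries and insertion points are drawn uniformly at random, which is an assumption for illustration rather than the operator design used in AGE.

```python
import random


def _cut_points(genome: str, rng: random.Random) -> tuple[int, int]:
    """Two distinct cut positions i < j, so genome[i:j] is a non-empty fragment."""
    i, j = sorted(rng.sample(range(len(genome) + 1), 2))
    return i, j


def duplicate(genome: str, rng: random.Random) -> str:
    """Copy a fragment and insert the copy at a random position."""
    i, j = _cut_points(genome, rng)
    k = rng.randrange(len(genome) + 1)
    return genome[:k] + genome[i:j] + genome[k:]


def delete(genome: str, rng: random.Random) -> str:
    """Remove a fragment."""
    i, j = _cut_points(genome, rng)
    return genome[:i] + genome[j:]


def transpose(genome: str, rng: random.Random) -> str:
    """Cut a fragment out and reinsert it elsewhere."""
    i, j = _cut_points(genome, rng)
    fragment, rest = genome[i:j], genome[:i] + genome[j:]
    k = rng.randrange(len(rest) + 1)
    return rest[:k] + fragment + rest[k:]


rng = random.Random(1)
genome = "AACTGGTTCAGATTACA"
print(duplicate(genome, rng), delete(genome, rng), transpose(genome, rng))
```

Duplication always lengthens the genome, deletion always shortens it, and transposition only rearranges existing characters. The text attributes AGE's tolerance of such edits to its implicit representation of device interactions.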

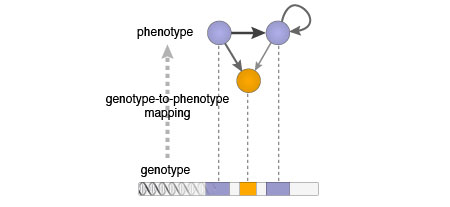

The AGE Genome

- The AGE genome consists of one or more strings of characters (called chromosomes) drawn from a finite genetic alphabet. The experimenter defines a device set, which specifies the kinds of devices that can appear in the network. For example, the device set of an evolutionary experiment aimed at synthesizing an analog electronic circuit could contain a few types of transistors, and the device set of an experiment aimed at synthesizing a neural network could contain a few types of artificial neuron models. The experimenter also specifies the number of terminals of each kind of device. For example, a bipolar transistor has three terminals, a capacitor has two terminals, and an artificial neuron could be specified as having one output terminal and one input terminal. The AGE genome contains one gene for each device that will appear in the network decoded from the genome, as shown in the figure.

Decoding the AGE Genome

- Analog Genetic Encoding identifies the regions of the genome that correspond to the devices and to their terminals and parameters by means of a collection of specific character sequences called tokens. The experimenter defines one device token for each element of the device set. A device token signals the start of a genome fragment that encodes an instance of the corresponding device. The experimenter also defines a terminal token, which delimits the character sequences associated with the terminals. The interaction between genes is represented by a device interaction map I, which transforms a pair of character sequences associated with two distinct device terminals into a numeric value that characterizes the link connecting the two terminals. The final result is an analog network decoded from the genome, as shown in the animation.
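The decoding steps above can be sketched in Python as follows. Everything concrete here is hypothetical: the single device type, the token strings `TTA` and `GG`, the convention that the terminal token closes each terminal sequence, and the interaction map, for which a longest-common-substring length with a threshold stands in for whatever map an actual AGE experiment defines.

```python
from dataclasses import dataclass
from itertools import combinations

DEVICE_TOKENS = {"TTA": ("neuron", 2)}  # hypothetical: token -> (device kind, n_terminals)
TERMINAL_TOKEN = "GG"                   # hypothetical terminal delimiter


@dataclass
class Device:
    kind: str
    terminals: list[str]


def decode_devices(genome: str) -> list[Device]:
    """Scan for device tokens; read one terminal sequence per terminal."""
    devices, pos = [], 0
    while pos < len(genome):
        for token, (kind, n_terms) in DEVICE_TOKENS.items():
            if genome.startswith(token, pos):
                cursor, terms = pos + len(token), []
                for _ in range(n_terms):
                    end = genome.find(TERMINAL_TOKEN, cursor)
                    if end < 0:
                        break  # incomplete gene: not a valid device
                    terms.append(genome[cursor:end])
                    cursor = end + len(TERMINAL_TOKEN)
                if len(terms) == n_terms:
                    devices.append(Device(kind, terms))
                    pos = cursor
                    break
        else:
            pos += 1  # no complete gene starts here; keep scanning
    return devices


def lcs_len(a: str, b: str) -> int:
    """Longest common substring length (stand-in interaction map)."""
    best, prev = 0, [0] * (len(b) + 1)
    for ca in a:
        cur = [0] * (len(b) + 1)
        for j, cb in enumerate(b, 1):
            if ca == cb:
                cur[j] = prev[j - 1] + 1
                best = max(best, cur[j])
        prev = cur
    return best


def interaction_links(devices: list[Device], threshold: int = 3) -> dict:
    """Link terminals of distinct devices whose interaction value passes a threshold."""
    terms = [(d, t, s) for d, dev in enumerate(devices) for t, s in enumerate(dev.terminals)]
    links = {}
    for (d1, t1, s1), (d2, t2, s2) in combinations(terms, 2):
        if d1 != d2 and (value := lcs_len(s1, s2)) >= threshold:
            links[((d1, t1), (d2, t2))] = value
    return links


devices = decode_devices("xxTTAACGTAGGCCCCGGzzTTATTTTGGACGTAGG")
print([d.terminals for d in devices])
print(interaction_links(devices))
```

Non-coding characters between genes (the `xx` and `zz` here) are simply skipped, which is one way an implicit, token-based encoding can tolerate insertions and rearrangements.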

ECAgents: Embodied and Communicating Agents

- ECAgents is a transdisciplinary European research project. Its goal is to provide a better understanding of the role of communication in collections of embodied and situated agents (real and simulated robots). This project involves people from different fields such as computer science, robotics, biology, physics and mathematics.

Our contribution to the ECAgents project

Our work focuses on the prerequisites for communication, which need to be in place before embodied agents can start bootstrapping communication systems of any complexity. These prerequisites depend on the complexity of the agents, the complexity of the environment, and the complexity of the tasks set for the agents.

We use artificial evolution to search for the emergence of communication, with neural networks as the underlying agent control mechanism.

Specifically, we are exploring the following prerequisites:

- The dynamics of the environment. For instance, an agent should look for food, but food placement as well as food characteristics, such as colour, are dynamic. Some potential food sources in the world are "good", but others are "bad". The agents need to explore and report their discoveries to other agents in order to maximise the group fitness.

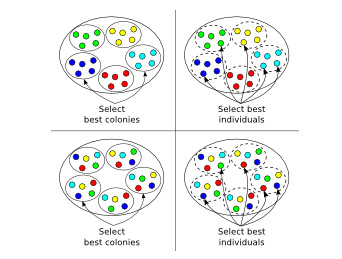

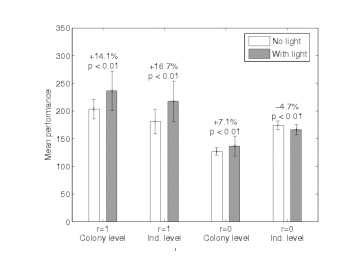

- The genetic relatedness and the level of selection. Does a homogeneous group perform better than a heterogeneous one? Is it better to use individual or group selection? Can we find a general principle across different experiments involving different tasks and environment dynamics? This work is done in collaboration with the EvoAnts project.

- The neural network architecture. How far can we go without a hidden layer? Is it necessary to balance the weight of each sensor modality (for example, by preprocessing vision, which has many pixels)? Is memory (recurrent neurons) mandatory? And if so, how many recurrent neurons are needed, and with which connections?

- The communication medium structure. Is one medium (such as vision) sufficient? Is it necessary to have different channels with different properties (such as sound and vision)? Is local communication mandatory?
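As an illustration of the architecture questions raised above, the sketch below implements a controller with no hidden layer, in which sensors project directly onto output neurons, plus optional recurrent connections from the previous outputs that provide a simple form of memory. The `tanh` activation, the weight layout and the class name are assumptions for illustration, not the networks used in ECAgents.

```python
import math


class Controller:
    """Sensors -> outputs with no hidden layer; optional recurrent memory."""

    def __init__(self, w_in, w_rec=None, bias=None):
        self.w_in = w_in      # w_in[k][i]: weight from sensor i to output k
        self.w_rec = w_rec    # w_rec[k][m]: weight from previous output m to output k
        self.bias = bias if bias is not None else [0.0] * len(w_in)
        self.prev = [0.0] * len(w_in)

    def step(self, sensors):
        out = []
        for k, row in enumerate(self.w_in):
            a = self.bias[k] + sum(w * x for w, x in zip(row, sensors))
            if self.w_rec is not None:
                a += sum(w * y for w, y in zip(self.w_rec[k], self.prev))
            out.append(math.tanh(a))
        self.prev = out  # remembered for the next step
        return out


reactive = Controller([[0.0]], bias=[0.5])
memory = Controller([[0.0]], w_rec=[[1.0]], bias=[0.5])
print(reactive.step([0.0]), reactive.step([0.0]))
print(memory.step([0.0]), memory.step([0.0]))
```

With `w_rec=None` the controller is purely reactive: the same sensor input always produces the same output. With recurrent weights, identical inputs can yield different outputs over time, which is the kind of memory the third question refers to.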

Our agents are s-bots, which were created as part of the Swarm-bots project. Both simulated and real s-bots are used.

Progress

We are exploring and analysing the bootstrapping of communication under several starting conditions, neural architectures and evolutionary conditions, using virtual s-bots in Enki.

We are porting some of our experiments to the real s-bot robots.

We are also exploring mechanisms that provide a smooth path for the evolution of signalling.

To conduct our experiments, we have developed a fast 2D physics-based simulator and an evolutionary framework. Both are open-source.

s-bots

- The s-bots have a diameter of 12 cm and a height of 15 cm, and carry two Li-Ion batteries, which give them about one hour of autonomy. Processing is handled by a custom 400 MHz XScale CPU board with 64 MB of RAM and 32 MB of flash memory, together with 12 distributed PIC microcontrollers for low-level control.

- Fig. 1. Left: Four conditions tested in our experiments.

- Fig. 1. Right: Comparison of mean performance with and without communication in the four cases.

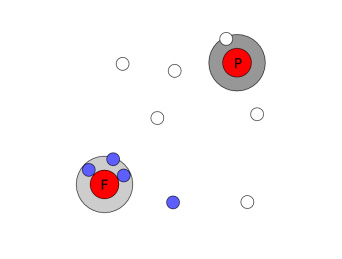

- Fig. 2. Left: Evolved food signalling strategy.

- Fig. 2. Right: Evolved poison signalling strategy

Swarm-bots project

- The goal of this project is to study a novel design approach to the hardware implementation of robotic systems, called SWARM-BOTS, for testing and exploiting their capabilities of self-assembly, self-organisation and metamorphosis. This approach has its theoretical roots in recent studies of swarm intelligence, i.e., studies of the self-organising and self-assembling capabilities shown by social animals (see figure 1).

An important part of the project consists of the physical construction of at least one swarm-bot, that is, a self-assembling and self-organising robot colony made of a number (30-35) of smaller devices, called s-bots. Each s-bot is a fully autonomous mobile robot capable of performing basic tasks such as autonomous navigation, perception of its surrounding environment, and grasping of objects. An s-bot is also designed to communicate with peer units and to connect to them physically, either rigidly or flexibly, thus forming a swarm-bot. A swarm-bot is intended to be capable of exploration, navigation and transportation of heavy objects over very rough terrain, especially where a single s-bot would have major problems achieving the task alone. The hardware structure is combined with a distributed adaptive control architecture inspired by ant colony behaviors.

An s-bot is shown in figures 2 and 3. As can be seen there, the mobility is ensured by a track system. Each track is controlled by a motor so that a robot can freely move in the environment and rotate on the spot.

These tracks allow each s-bot to move even on moderately rough terrain, with more complex situations being addressed by swarm-bot configurations.
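For a first approximation of how two independently driven tracks let an s-bot move and turn on the spot, the tracks can be modeled as a differential drive. The sketch below integrates the robot pose with a simple Euler step; it ignores track slip, which real tracked vehicles exhibit, and `track_width` is a hypothetical parameter rather than a measured s-bot dimension.

```python
import math


def track_step(x, y, theta, v_left, v_right, track_width, dt):
    """One Euler step of differential-drive kinematics (no slip).

    v_left, v_right: track speeds in m/s; track_width: distance between tracks in m.
    """
    v = (v_left + v_right) / 2.0                # forward speed of the body centre
    omega = (v_right - v_left) / track_width    # yaw rate in rad/s
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta


# Opposite track speeds: the robot rotates on the spot.
print(track_step(0.0, 0.0, 0.0, -0.05, 0.05, track_width=0.1, dt=1.0))
# Equal track speeds: the robot drives straight ahead.
print(track_step(0.0, 0.0, 0.0, 0.1, 0.1, track_width=0.1, dt=2.0))
```

Driving the two tracks at equal and opposite speeds yields zero forward speed and a pure rotation, which is what "rotate on the spot" means in this idealized model.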

The motor base with the tracks can rotate with respect to the main body by means of a motorized axis.

S-bots can connect to each other with two types of possible physical interconnections: rigid and semi-flexible.

Rigid connections between two s-bots are implemented by a gripper mounted on a horizontal active axis. This gripper has a very large acceptance area and can securely grasp another s-bot at any angle and, if necessary, lift it.

Semi-flexible connections are implemented by flexible arms actuated by three motors positioned at the point of attachment on the main body. These three degrees of freedom allow the arm to move laterally and vertically as well as to extend and retract.

Using rigid and flexible connections, s-bots can form swarm-bots with 1D or 2D structures that can bend and take 3D shapes.

Rigid and flexible connections have complementary roles in the functioning of the swarm-bot. The rigid connection is mainly used to form rigid chains that have to pass large gaps, as illustrated in Figure 5.

The flexible connection is adapted for configurations where each robot can still have its own mobility inside the structure. The swarm-bot can of course also have mixed configurations, including both rigid and flexible connections, as illustrated in figure 4.

Potential applications of this type of swarm robotics include semi-automatic space exploration, search and rescue, and underwater exploration.

We now have two functional prototypes. We are working on behaviours using a single robot as well as several robots.

On the software side, the XScale processor board is running Familiar/GNU/Linux with wireless ethernet.

We are also synchronizing the simulator with data from the real robots so that a simulated behaviour can easily be ported to the real robot.

Swarm-bots


- Figure 1: S-bot prototype.

- Fig. Left: The rigid connection can be used to form chains and pass very big obstacles and large gaps.

- Fig. Right: Swarm-bot robot configuration to pass a large gap.

The Eyebots

'A new swarm of indoor flying robots capable of operating in synergy with swarms of foot-bots and hand-bots'

- Introduction:

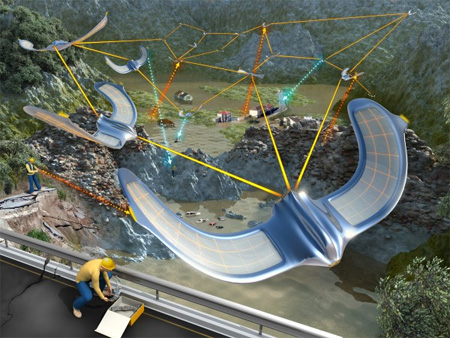

Eyebots are autonomous flying robots with powerful sensing and communication abilities for search, monitoring, and pathfinding in built environments. Eyebots operate in swarm formation, as honeybees do, to efficiently explore built environments, locate predefined targets, and guide other robots or humans (figure 1).

Eyebots are part of the Swarmanoid, a European research project aimed at developing a heterogeneous swarm of wheeled, climbing, and flying robots that can carry out tasks normally assigned to humanoid robots. Within the Swarmanoid, Eyebots serve the role of eyes and guide other robots with simpler sensing abilities.

Eyebots can also be deployed on their own in built environments to locate humans who may need help, suspicious objects, or traces of dangerous chemicals. Their programmability, combined with individual learning and swarm intelligence, makes them rapidly adaptable to several types of situations that may pose a danger for humans.

Eyebots are currently under development at LIS, EPFL. We will post additional information on this site as soon as technical documents become available for public disclosure.

- Figure 1 Left: The Eyebots - artistic impression of the eye-bots used in an airport

- Figure 1 Right: The Eyebots - artistic impression of the eye-bots used in an urban house

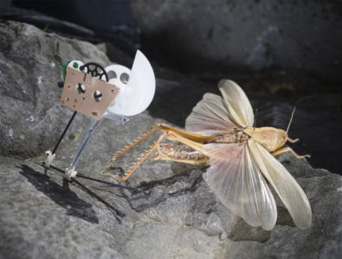

Self Deploying Microglider

Developing a hybrid robotic vehicle capable of deploying itself into the air and performing goal-directed gliding

- Gliding flight is a powerful means to overcome obstacles and travel from A to B.

It can be applied in miniature robotics as a very versatile and easy-to-use locomotion method. In this project we aim to develop a palm-sized Microglider that can deploy itself from the ground or from walls, then open its wings, recover from almost any position in mid-air, and perform goal-directed gliding.

Nature is a potential source of inspiration for accomplishing this task efficiently. In the animal kingdom, many small animals are able to get into the air by jumping, running fast or dropping from trees. Once airborne, they recover and stabilize passively or actively and perform goal-directed aerial descent (e.g., gliding frogs, flying geckos, gliding lizards, locusts, crickets, flying squirrels, gliding fish, gliding ants). These animals do not use steady-state gliding; instead, they change their velocity and angle of attack dynamically during flight to optimize the trajectory, either to increase the gliding ratio or to land on a chosen spot. The same principles may be advantageous for small aerial robots as well.

The critical issues on the path towards an efficient deploying Microglider at this scale are (i) the trade-off between passive stability, maneuverability and maximal gliding ratio, (ii) the low Reynolds number (<10,000), which increases the influence of boundary-layer effects and makes conventional, well-known large-scale aerodynamics inapplicable, and (iii) the control of the unsteady dynamics during recovery and flight.
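The Reynolds number mentioned in point (ii) is Re = ρVc/μ, where ρ is the air density, V the airspeed, c a characteristic length such as the wing chord, and μ the dynamic viscosity of air. The sketch below evaluates it for an assumed palm-sized wing with a 5 cm chord gliding at 2 m/s; these two values are illustrative guesses, not measurements of the Microglider.

```python
RHO_AIR = 1.2     # kg/m^3, air density near sea level (approximate)
MU_AIR = 1.8e-5   # Pa*s, dynamic viscosity of air (approximate)


def reynolds(speed_m_s: float, chord_m: float,
             rho: float = RHO_AIR, mu: float = MU_AIR) -> float:
    """Re = rho * V * c / mu (dimensionless)."""
    return rho * speed_m_s * chord_m / mu


# Hypothetical palm-sized glider: 5 cm chord at 2 m/s.
print(round(reynolds(2.0, 0.05)))
```

With these assumptions Re is roughly 6,700, i.e., below the 10,000 bound quoted above and far below the values typical of full-size aircraft.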

The work in progress addresses these aspects. Embedded mechanisms for autonomous deployment from ground or walls into the air will be considered at the next stage.

A miniature 7g jumping robot

- Jumping can be a very efficient mode of locomotion for small robots to overcome large obstacles and travel in natural, rough terrain. As the second step towards the realization of the Self Deploying Microglider, we present the development and characterization of a novel 5cm, 7g jumping robot. It can jump over obstacles more than 24 times its own size and outperforms existing jumping robots with respect to jump height per weight and jump height per size. It employs elastic elements in a four-bar linkage leg system to allow very powerful jumps and adjustment of the jumping force, take-off angle and force profile during the acceleration phase.
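The jump figures above can be turned into a rough energy budget. Assuming a purely vertical jump to 24 × 5 cm = 1.2 m with no air drag, the robot must leave the ground with at least the speed and kinetic energy computed below; real jumps need more, because of drag, non-vertical take-off and losses in the leg mechanism.

```python
import math

G = 9.81  # m/s^2, gravitational acceleration


def min_jump_budget(mass_kg: float, height_m: float) -> tuple[float, float]:
    """Lower bounds for a drag-free vertical jump to height_m.

    Returns (energy in joules, take-off speed in m/s).
    """
    energy = mass_kg * G * height_m         # potential energy at the apex
    v0 = math.sqrt(2.0 * G * height_m)      # from v0^2 = 2 g h
    return energy, v0


height = 24 * 0.05   # 24 times the 5 cm body size
energy, v0 = min_jump_budget(0.007, height)
print(f"{energy:.3f} J, {v0:.2f} m/s")
```

Under these idealized assumptions the 7 g robot needs at least about 0.08 J and a take-off speed of about 4.9 m/s, which gives a feel for how much energy the elastic elements must store.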

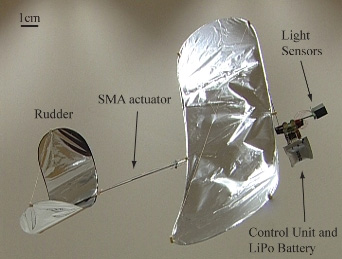

A 1.5g SMA actuated Microglider looking for the Light

- As a first step towards the exploration of gliding as an alternative or complementary locomotion principle in miniature robotics, we developed a 1.5g ultra-lightweight microglider. It is equipped with sensors and electronics to achieve phototaxis (flying towards the light), which can be seen as a minimal level of control autonomy. To characterize the autonomous operation of this robot, we developed an experimental setup consisting of a launching device and a light source positioned 1m below and 4m away, at varying angles with respect to the launching direction. Statistical analysis of 36 autonomous flights characterizes its flight and phototaxis efficiency.
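Two small calculations help frame this experiment. If the 4m is read as horizontal distance (an assumption; the text does not say), reaching a light 1m below requires an average glide ratio of at least 4. The steering rule below is a hypothetical Braitenberg-style phototaxis controller with two light sensors, included only to show what a minimal level of control autonomy can look like; the text does not describe the microglider's actual sensors or controller.

```python
def required_glide_ratio(horizontal_m: float, drop_m: float) -> float:
    """Distance travelled per unit of height lost needed to reach the target."""
    return horizontal_m / drop_m


def phototaxis_turn(left_light: float, right_light: float, gain: float = 1.0) -> float:
    """Hypothetical steering command: positive = turn right, towards the brighter side."""
    return gain * (right_light - left_light)


print(required_glide_ratio(4.0, 1.0))
print(phototaxis_turn(0.2, 0.8))   # light is brighter on the right
```

A rule this simple needs no map or position estimate: the glider just keeps turning until both sensors see similar brightness, i.e., until it points at the light.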

Body Sensing

An adaptive wearable device for monitoring sleep and preventing fatigue

- Fatigue is a major source of stress and accidents in today's world, but there are no objective ways of monitoring and preventing the build-up of fatigue.

Sleep and wake periods are major factors, though not the only ones, in regulating the onset of fatigue. In this project, we start by developing a non-intrusive, wearable device for monitoring sleep and wake phases.

Since body signals related to sleep and wake are different from person to person, our device incorporates learning technologies adapted from our work on autonomous robotics. This allows the device to self-tune to the user.

The output of the sleep/wake device will then be incorporated into a model of fatigue that also takes other body signals into account and can adapt to the style and physiology of the user.

A version of the sleep/wake device will be tested within the framework of Solar Impulse, where the pilot has to be alert during the entire flight, which can last up to five days and nights. Our device can be used to predict the pilot's fatigue and to calculate his optimal break times, always taking the mission status into account.

- Pilot Donna - Body Sensing, artistic impression

- The Solar Impulse plane flying above the EPFL campus (photomontage)

Active Vision Project

- Coevolution of Active Vision and Feature Selection

We show that the co-evolution of active vision and feature selection can greatly reduce the computational complexity required to produce a given visual performance. Active vision is the sequential and interactive process of selecting and analyzing parts of a visual scene. Feature selection, by contrast, is the development of sensitivity to relevant features in the visual scene to which the system selectively responds. Each of these processes has been investigated and adopted in machine vision. However, the combination of active vision and feature selection is still largely unexplored.

In our experiments, behavioral machines equipped with primitive vision systems and direct pathways between visual and motor neurons are evolved while they freely interact with their environments. We describe the application of this methodology in three sets of experiments, namely shape discrimination, car driving, and robot navigation. We show that these systems develop sensitivity to a number of oriented, retinotopic visual features (oriented edges, corners, height) together with a behavioral repertoire. This sensitivity is used to locate, bring, and keep these features in particular regions of the vision system, resembling strategies observed in simple insects.

Active Vision and Receptive Field Development

In this project we went one step further and investigated the ontogenetic development of receptive fields in an evolutionary mobile robot with active vision. In contrast to the previous work where synaptic weights for both receptive field and behavior were genetically encoded and evolved on the same time scale, here the synaptic weights for receptive fields develop during the life of the individual. In these experiments, behavioral abilities and receptive fields develop on two different temporal scales, phylogenetic and ontogenetic respectively. The evolutionary experiments are carried out in physics-based simulation and the evolved controllers are tested on the physical robot in an outdoor environment.
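The text does not name the learning rule used for the receptive fields, so the sketch below uses Oja's rule, a standard Hebbian rule, as a stand-in. The weight vector `w` plays the role of a receptive field over the input pixels; with zero-mean input it converges to a unit vector aligned with the dominant direction of variation in the input, here a toy two-pixel "retina" whose pixels always co-vary.

```python
import math
import random


def oja_train(samples, n_inputs, lr=0.05, epochs=200, seed=0):
    """Hebbian learning with Oja's normalisation: dw = lr * y * (x - y * w)."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]   # small random start
    for _ in range(epochs):
        for x in samples:
            y = sum(wi * xi for wi, xi in zip(w, x))          # neuron response
            w = [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


# Toy 2-pixel input whose pixels always vary together along the diagonal.
samples = [(-1.0, -1.0), (-0.5, -0.5), (0.5, 0.5), (1.0, 1.0)]
w = oja_train(samples, n_inputs=2)
print(w, math.hypot(*w))
```

In this setting the weights are shaped during the individual's "lifetime" by the input statistics, while evolution could still set parameters such as `lr`, which mirrors the two time scales described above.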

Such a neural architecture with visual plasticity for a freely moving behavioral system also allows us to explore the role of active body movement in the formation of the visual system. More specifically, we study the development of visual receptive fields and behavior of robots under active and passive movement conditions. We show that the receptive fields and behavior of robots developed under the active condition differ significantly from those developed under the passive condition. A set of analyses suggests that the coherence of receptive fields developed in the active condition plays an important role in the performance of the robot.

Omnidirectional Active Vision

The omnidirectional camera is a relatively new optical device that provides a 360-degree field of view, and it has been widely used in many practical applications, including surveillance systems and robot navigation. However, in most applications the visual system uniformly processes the entire image, which is computationally expensive when detailed information is required. In other cases, the focus is fixed by the designers or users for particular uses. In other words, the system is not allowed to interact freely with the environment and selectively choose visual features.

By contrast, all vertebrates and several insects, even those with a very large field of view, have steerable eyes with a foveal region, which means that they must choose the necessary information from a vast visual field at any given time in order to survive. Such a sequential and interactive process of selecting and analyzing behaviorally relevant parts of a visual scene is called active vision.

In this project we explore omnidirectional active vision: coupled with an omnidirectional camera, a square artificial retina can immediately access any visual feature located in any direction, which is impossible for a conventional pan-tilt camera because of its mechanical constraints. Selecting behaviorally relevant features in such a broad field of view is a challenging task for the artificial retina.
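One way to see why an omnidirectional camera removes the pan-tilt limits is to assume the image has been unwrapped into a panoramic strip whose columns cover the full 360° of azimuth. A square retina can then be placed anywhere, wrapping around the seam, as in the hypothetical sketch below (nested Python lists stand in for image arrays).

```python
def retina_patch(panorama, top, left, size):
    """Sample a size x size retina from an unwrapped 360-degree panorama.

    Columns wrap around, so a feature straddling the 0/360-degree seam
    is still seen in one piece; rows are simply clipped at the image edge.
    """
    width = len(panorama[0])
    rows = panorama[top:top + size]
    return [[row[(left + c) % width] for c in range(size)] for row in rows]


def column_to_azimuth(col, width):
    """Azimuth in degrees of a panorama column."""
    return 360.0 * (col % width) / width


panorama = [[0, 1, 2, 3, 4, 5],
            [10, 11, 12, 13, 14, 15]]
print(retina_patch(panorama, 0, 5, 3))   # wraps from the last column to the first
print(column_to_azimuth(3, 6))
```

Moving the retina is just a change of indices, so any direction is reachable in one step, whereas a pan-tilt camera must physically rotate and is bounded by its joint limits.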

Active Vision for 3D Landmark-Navigation

Active vision may be useful for landmark-based navigation, where the relationships between landmarks require active scanning of the environment. In this project we explore this hypothesis by evolving the neural system controlling the vision and behavior of a mobile robot equipped with a pan/tilt camera, so that it can discriminate visual patterns and reach the goal zone. The experimental setup requires the robot to actively move its gaze direction and integrate information over time in order to accomplish the task. We show that the evolved robot can detect separate features sequentially and discriminate their spatial relationships. These results suggest an intriguing hypothesis about landmark-based navigation in insects.

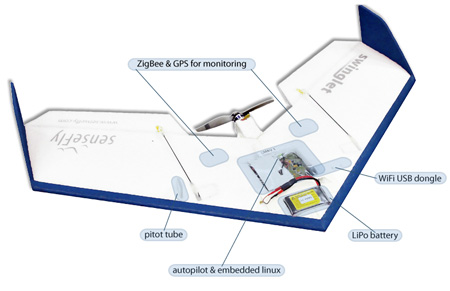

The SMAVNET project

Swarming Micro Air Vehicle Networks for Communication Relay

- Big Picture

The SMAVNET project aims at developing swarms of flying robots that can be deployed in disaster areas to rapidly create communication networks for rescuers. Flying robots are interesting for such applications because they are fast, can easily overcome difficult terrain, and benefit from line-of-sight communication.

To make aerial swarming a reality, robots and controllers need to be made as simple as possible.

From a hardware perspective, robots are designed to be robust, safe, light-weight and low-cost. Furthermore, protocols and human-swarm interfaces are developed to allow non-experts to easily and safely operate large groups of robots.

From a software perspective, controllers allow flying robots to work together. For swarming, robots react to wireless communication with neighboring robots or rescuers (communication-based behaviors). Using communication as a sensor is interesting because most flying robots are equipped with off-the-shelf radio modules that are low-cost, lightweight and relatively long-range. Furthermore, this strategy avoids the need for position information, which is required by all existing aerial swarm algorithms and typically requires sensors that depend on the environment (GPS, cameras) or are expensive and heavy (lasers, radars).

Robot

- The flying robots were specifically designed for safe, inexpensive and fast prototyping of aerial swarm experiments.

They are lightweight (420 g, 80 cm wingspan) and built out of expanded polypropylene (EPP), with an electric motor mounted at the back and two control surfaces serving as elevons (combined ailerons and elevator). The robots run on a LiPo battery and have an autonomy of 30 min. They are equipped with an autopilot for the control of altitude, airspeed and turn rate. Embedded in the autopilot is a microcontroller that runs a minimalist control strategy based on input from only 3 sensors: one gyroscope and two pressure sensors.
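The autopilot is described as using one gyroscope and two pressure sensors. A common arrangement, assumed here rather than stated in the text, is one static-pressure sensor for altitude and one differential (pitot) sensor for airspeed, with the gyroscope providing the turn rate directly. The sketch converts pressures into airspeed (from Bernoulli's equation) and altitude (from the standard-atmosphere barometric formula), and adds a clamped proportional correction as a hypothetical stand-in for the minimalist control strategy.

```python
import math

RHO = 1.225      # kg/m^3, standard sea-level air density
P0 = 101325.0    # Pa, standard sea-level pressure


def airspeed_from_dynamic_pressure(q_pa: float, rho: float = RHO) -> float:
    """Bernoulli: q = 0.5 * rho * v^2, so v = sqrt(2 q / rho)."""
    return math.sqrt(2.0 * max(q_pa, 0.0) / rho)


def altitude_from_static_pressure(p_pa: float, p0: float = P0) -> float:
    """Standard-atmosphere barometric formula (troposphere), in metres."""
    return 44330.0 * (1.0 - (p_pa / p0) ** (1.0 / 5.255))


def p_command(setpoint: float, measured: float, gain: float, limit: float) -> float:
    """Hypothetical clamped proportional correction, e.g. throttle from airspeed error."""
    return max(-limit, min(limit, gain * (setpoint - measured)))


v = airspeed_from_dynamic_pressure(61.25)
h = altitude_from_static_pressure(100000.0)
print(round(v, 2), round(h, 1), p_command(12.0, v, gain=0.5, limit=1.0))
```

Each quantity comes from a single cheap sensor, which is in the spirit of the minimalist three-sensor design described above.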

Swarm controllers are implemented on a Toradex Colibri PXA270 CPU board running Linux, connected to an off-the-shelf USB WiFi dongle. The output of these controllers, namely a desired turn rate, speed or altitude, is sent as control command to the autopilot.

In order to log flight trajectories, the robot is further equipped with a u-blox LEA-5H GPS module and a ZigBee (XBee PRO) transmitter.

Swarm Algorithms

- Designing swarm controllers is typically challenging because no obvious relationship exists between the individual robot behaviors and the emergent behavior of the entire swarm. For this reason, we turn to biology for inspiration.

In a first approach, artificial evolution is used for its potential to automatically discover simple and unthought-of robot controllers. Good evolved controllers are then reverse-engineered so as to capture the simple and efficient solutions found through evolution in hand-designed controllers that are easy to understand and can be modeled. Resulting controllers can therefore be adapted to a variety of scenarios in a predictable manner. Furthermore, they can be extended to accommodate entirely new applications. Reverse-engineered controllers demonstrate a variety of behaviors such as exploration, synchronization, area coverage and communication relay.

In a second approach, inspiration is taken from ants that can optimally deploy to search for and maintain pheromone paths leading to food sources in nature. This is analogous to the deployment and maintenance of communication pathways between rescuers using the SMAVNET.

Swarm Setup

- All the software and hardware necessary to perform experiments with 10 flying robots were developed within the scope of this project. To the best of our knowledge, this setup involves the largest number of flying robots operating outdoors to date.

For fast deployment of large swarms, input from the swarm operator must be reduced to a minimum during robot calibration, testing and all phases of flight (launch, swarming, landing). Therefore, robot reliability, safety and autonomy must be pushed to a maximum so that operators can easily perform experiments without safety pilots. In our setup, robots auto-calibrate and perform a self-check before being launched by the operator. Robots can be monitored and controlled through a swarm interface running on a single computer.

The critical issue of operational safety has been addressed by light-weight, low-inertia platform design and by implementing several security features in software. Among other things, we looked at mid-air collision avoidance using local communication links and negotiation of flight altitudes between robots. By providing a risk analysis for ground impact and mid-air collisions to the Swiss Federal Office for Civil Aviation (FOCA), we obtained an official authorization for beyond-line-of-sight swarm operation at our testing site.
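The text mentions negotiating flight altitudes between robots but does not describe the protocol. As a purely hypothetical sketch, robots that can hear each other could each take a distinct altitude layer ordered by robot ID, and any pair closer than a minimum vertical separation would be flagged for renegotiation.

```python
def assign_altitudes(robot_ids, base_alt_m, separation_m):
    """Hypothetical rule: one altitude layer per robot in range, ordered by ID."""
    return {rid: base_alt_m + i * separation_m
            for i, rid in enumerate(sorted(robot_ids))}


def conflicts(altitudes, min_sep_m):
    """Pairs of robots whose altitudes are closer than the minimum separation."""
    ids = sorted(altitudes)
    return [(a, b)
            for i, a in enumerate(ids)
            for b in ids[i + 1:]
            if abs(altitudes[a] - altitudes[b]) < min_sep_m]


plan = assign_altitudes([7, 3, 5], base_alt_m=50.0, separation_m=10.0)
print(plan, conflicts(plan, 10.0))
print(conflicts({1: 50.0, 2: 55.0, 3: 80.0}, 10.0))
```

Because every robot applies the same deterministic rule to the same set of IDs heard over local links, neighbours reach consistent assignments without a central coordinator, assuming they all hear the same set of peers.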

Bio-inspired Vision-based Flying Robots

Applying bio-inspired methods to indoor flying robots for autonomous vision-based navigation.

- Robotic vision raises the question of how to use the large amount of information gathered through the receptors efficiently and in real time. The mainstream approach to computer vision, based on a sequence of pre-processing, segmentation, object extraction, and pattern recognition of each single image, is not viable for behavioral systems that must respond very quickly in their environments. Behavioral and energetic autonomy will benefit from lightweight vision systems tuned to simple features of the environment.

In this project, we explore an approach whereby robust vision-based behaviors emerge out of the coordination of several visuo-motor components that can directly link simple visual features to motor commands. Biological inspiration is taken from insect vision and evolutionary algorithms are used to evolve efficient neural networks. The resulting controllers select, develop, and exploit visuo-motor components that are tailored to the information relevant for the particular environment, robot morphology, and behavior.

Evolving Neural Network for Vision-based Navigation

The story started with a non-flying robot. Floreano et al. (2001) demonstrated that an evolved spiking neural network could control a Khepera robot for smooth vision-based wandering in an arena with randomly sized black and white patterns on the walls. The best individuals moved forward and avoided walls very reliably. However, the dynamics of this terrestrial robot are much simpler than those of flying devices, and we are currently exploring whether that approach can be extended to flying robots.
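To make the idea of a spiking controller concrete, here is a minimal sketch of a discrete-time leaky integrate-and-fire neuron whose spike count over a time window can be read as a motor command. This is a common textbook simplification chosen for illustration; it is not necessarily the neuron model used by Floreano et al. (2001), and the threshold, leak, refractory period and the helper names are assumptions.

```python
class LeakyIntegrateAndFire:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""

    def __init__(self, threshold=1.0, leak=0.9, refractory_steps=2):
        self.threshold = threshold
        self.leak = leak  # fraction of membrane potential kept each step
        self.refractory_steps = refractory_steps
        self.potential = 0.0
        self.refractory = 0

    def step(self, input_current):
        """Integrate one time step of input; return True if the neuron spikes."""
        if self.refractory > 0:
            # After a spike the neuron ignores input for a few steps.
            self.refractory -= 1
            return False
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            self.refractory = self.refractory_steps
            return True
        return False


def spike_count(neuron, inputs):
    """Number of spikes emitted over a sequence of input currents."""
    return sum(neuron.step(i) for i in inputs)


def weighted_input(receptor_spikes, weights):
    """Input current from visual receptors; weights could be evolved, e.g. in {-1, 0, 1}."""
    return sum(w * s for w, s in zip(weights, receptor_spikes))
```

Stronger input produces more spikes per window, so the spike count of a motor neuron can serve as a graded command, for example a wheel speed.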

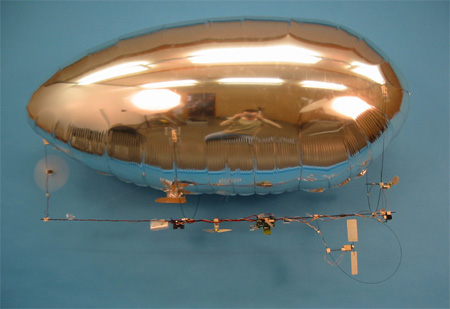

Evolution Applied to Physical Flying Robots: the Blimp

- Evolving aerial robots brings a new set of challenges. Compared with a wheeled robot, the major issues in developing (evolving, e.g. using goevo) a control system for an airship are (1) the extension to three dimensions, (2) the impossibility of communicating with a computer via cables, (3) the difficulty of defining and measuring performance, and (4) the more complex dynamics. For example, while the Khepera is controlled in speed, the blimp is controlled in thrust (which sets the speed derivative) and can slip sideways. Moreover, inertial and aerodynamic forces play a major role. Artificial evolution is a promising method to automatically develop control systems for complex robots, but it requires machines that can move for long periods of time without human intervention and withstand shocks.
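The difference between speed control and thrust control mentioned above can be illustrated with a one-dimensional model. In this sketch, assuming a linear drag force and illustrative mass and drag values, the wheeled robot's speed simply follows its command, whereas the blimp's speed obeys m dv/dt = thrust - drag * v, so it lags behind the command and keeps coasting after the thrust is removed. Sideways slip and aerodynamic effects beyond linear drag are not modelled.

```python
def wheeled_speeds(commands):
    """Speed-controlled robot (e.g. a Khepera): speed equals the command."""
    return list(commands)


def blimp_speeds(thrusts, mass=0.2, drag=0.1, dt=0.1, v0=0.0):
    """Thrust-controlled blimp: m * dv/dt = thrust - drag * v, explicit Euler.

    mass (kg), drag (N s/m) and dt (s) are illustrative values; for an airship,
    mass should also include the added mass of the displaced air.
    """
    v = v0
    speeds = []
    for thrust in thrusts:
        v += dt * (thrust - drag * v) / mass
        speeds.append(v)
    return speeds


if __name__ == "__main__":
    step_input = [0.05] * 50 + [0.0] * 50  # thrust on for 5 s, then off
    blimp = blimp_speeds(step_input)
    print(f"after 1 step: {blimp[0]:.3f} m/s, after 5 s: {blimp[49]:.3f} m/s, "
          f"5 s after cut-off: {blimp[-1]:.3f} m/s")
```

With these values the time constant mass/drag is 2 s, so the blimp needs several seconds to approach its steady-state speed thrust/drag = 0.5 m/s, and it does not stop when the thrust is cut.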

- The need for long unattended operation and shock resistance led us to develop the Blimp 2 shown in the pictures. All onboard electronic components are connected to a microcontroller with a wireless connection to a desktop computer. Bidirectional digital communication with the desktop computer is handled by a Bluetooth radio module with a range of more than 15 m. Energy is provided by a Li-Poly battery, which lasts more than 3 hours under normal operation during evolutionary runs with goevo. For now, a simple linear camera is attached to the front of the gondola, pointing forward; we are currently working on other kinds of micro-cameras. The other embedded sensors are an anemometer for fitness evaluation, a MEMS gyro for estimating yaw rotation speed, and a distance sensor for altitude measurement.
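The fitness function computed from the anemometer is not given here. As a hypothetical sketch, assuming the anemometer reports forward airspeed and that evolution rewards sustained forward motion, fitness could be the mean airspeed over an evaluation, with negative readings clipped to zero and values normalized by an assumed maximum speed so that fitness lies in [0, 1]. The function name and `max_speed` parameter are illustrative.

```python
def anemometer_fitness(readings, max_speed):
    """Mean forward airspeed over an evaluation, normalized to [0, 1].

    readings: airspeed samples in m/s (hypothetical sensor output);
    max_speed: assumed upper bound used for clipping and normalization.
    """
    if not readings or max_speed <= 0:
        return 0.0
    clipped = [min(max(r, 0.0), max_speed) for r in readings]
    return sum(clipped) / (len(clipped) * max_speed)


if __name__ == "__main__":
    print(anemometer_fitness([0.5, 1.0, -0.2, 2.0], max_speed=1.0))  # 0.625
```

Clipping keeps a single spurious high reading from dominating the score, and measuring airspeed onboard avoids the need for an external tracking system.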

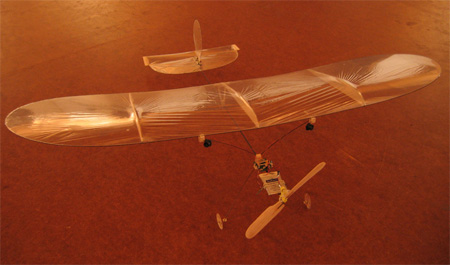

Final Goal: an Autonomous Vision-based Indoor Plane

- In order to further demonstrate this concept, we chose indoor slow flyers as a well-suited test-bed because of their need for very fast reactions, low power consumption, and extremely lightweight equipment. Flying indoors simplifies the experiments by avoiding the effect of wind and the dependence on the weather, and it allows the visual environment to be modified as needed. Our new model F2 (picture on the left) has the following characteristics: bidirectional digital communication using Bluetooth, an overall weight of 30 g, an 80 cm wingspan, more than 20 minutes of autonomy, a minimum flight speed of 1.1 m/s, a minimum flying space of about 7x7 meters, 2 or 3 linear cameras, 1 gyro, and 1 two-axis accelerometer.

Compared with the Blimp, such airplanes are slightly faster and have 2 more degrees of freedom (pitch and roll). Moreover, evolving them directly in a room is not feasible. Therefore, we are currently working on a robotic flight simulator (see below). Both physical and simulated indoor slow flyers are compatible with goevo.

Initial experiments using optic flow without evolutionary methods have been carried out to demonstrate vision-based obstacle avoidance with a 30-gram airplane flying at about 2 m/s (model F2, see pictures on the left). The experimental environment is a 16x16 m arena equipped with textured walls.

The behavior of the plane is inspired by that of flies (see Tammero and Dickinson, The Journal of Experimental Biology 205, pp. 327-343, 2002). The ultra-light aircraft flies mainly in straight lines, using gyroscopic information to counteract small perturbations and keep its heading. Whenever frontal optic-flow expansion exceeds a fixed threshold, it engages a saccade (a quick turning action), which consists of a predefined series of motor commands that quickly turn the plane away from the obstacle (see video below). The direction of the saccade (left or right) is chosen so as to turn away from the side experiencing higher optic flow (corresponding to closer objects).
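The heading strategy described above can be sketched as a small state machine: gyro-based heading hold during straight flight, and a replayed sequence of rudder commands once frontal expansion crosses the threshold. In this minimal sketch the threshold, the saccade pattern, the gyro gain, the sign convention and the use of the summed left and right optic flow as an expansion proxy are all assumptions, not values from the F2.

```python
from dataclasses import dataclass, field

# Hypothetical values for illustration only.
EXPANSION_THRESHOLD = 0.6  # frontal expansion that triggers a saccade
SACCADE_PATTERN = (1.0, 1.0, 1.0, 0.5, 0.0)  # predefined rudder magnitudes, one per step
GYRO_GAIN = 0.8  # proportional gain of the heading-hold loop


@dataclass
class SaccadeController:
    """Fly-inspired heading control: gyro-stabilized straight flight plus saccades.

    Rudder commands are in [-1, 1]; positive means "turn right" (assumed convention).
    """
    pending: list = field(default_factory=list)  # remaining saccade commands
    direction: int = 0  # +1 right, -1 left

    def step(self, flow_left, flow_right, yaw_rate):
        # While a saccade is running, replay the predefined command series.
        if self.pending:
            return self.direction * self.pending.pop(0)
        # Crude frontal-expansion proxy: outward optic flow seen by both cameras.
        expansion = flow_left + flow_right
        if expansion > EXPANSION_THRESHOLD:
            # Turn away from the side with higher optic flow (closer obstacle).
            self.direction = 1 if flow_left > flow_right else -1
            self.pending = list(SACCADE_PATTERN)
            return self.direction * self.pending.pop(0)
        # Straight flight: counteract yaw perturbations measured by the gyro.
        return max(-1.0, min(1.0, -GYRO_GAIN * yaw_rate))
```

Because a saccade is replayed open-loop, the controller ignores vision until the turn is complete, which mirrors the description of a predefined series of motor commands.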

Two horizontal linear cameras are mounted on the leading edge of the wing to feed the optic-flow estimation algorithm running on the embedded 8-bit microcontroller. Heading control, including obstacle avoidance, is thus truly autonomous, while an operator controls only the altitude (pitch) of the airplane via a joystick and a Bluetooth communication link.
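The optic-flow algorithm is not named here. One algorithm well suited to a linear camera and a small microcontroller is Srinivasan's image interpolation algorithm (I2A). The sketch below, written in floating point for readability, estimates the image shift s (pixels per frame) between two successive 1D frames by modelling the new frame as curr[x] ≈ prev[x] + (s / 2k) * (prev[x-k] - prev[x+k]) and solving for s by least squares. It is an illustrative stand-in, not necessarily the algorithm running on the F2.

```python
import math


def i2a_shift(prev, curr, k=1):
    """Estimate the 1D image shift (pixels/frame) between two frames with I2A.

    Models curr[x] ~ prev[x] + (s / (2k)) * (prev[x-k] - prev[x+k]) and solves
    for s by least squares. Positive s means the pattern moved towards higher
    pixel indices. Returns 0.0 for a textureless image (no gradient).
    """
    numerator = 0.0
    denominator = 0.0
    for x in range(k, len(prev) - k):
        diff = prev[x - k] - prev[x + k]
        numerator += (curr[x] - prev[x]) * diff
        denominator += diff * diff
    if denominator == 0.0:
        return 0.0
    return 2 * k * numerator / denominator


if __name__ == "__main__":
    width = 100
    prev = [math.sin(0.2 * x) for x in range(width)]
    curr = [math.sin(0.2 * (x - 0.5)) for x in range(width)]  # shifted by 0.5 px
    print(f"estimated shift: {i2a_shift(prev, curr):.3f} px")
```

On an 8-bit microcontroller the same computation would typically be carried out in fixed-point integer arithmetic.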

So far, the 30-gram robot has been able to fly collision-free for more than 4 minutes without any intervention regarding its heading. Saccades took up only 20% of the flight time, which indicates that the plane flew in straight trajectories except when very close to the walls. During those 4 minutes, the aerial robot generated 50 saccades and covered about 300 m in straight motion.

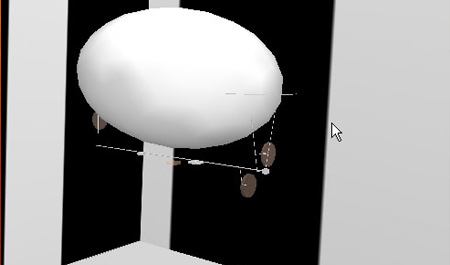

Robotic Flight Simulator

- A flight simulator based on Webots4 helps us speed up evolutionary runs (up to 10x faster) and rapidly test new ideas. Using OpenGL and ODE (Open Dynamics Engine), Webots4 can accurately simulate 3D motion with physical effects such as gravity, inertia, shocks, and friction. Our blimp dynamical model includes buoyancy, drag, Coriolis, and added-mass effects (cf. the official Webots distribution for a simplified example of this model). So far, we have been able to demonstrate very good behavioral correspondence between the simulated Blimp 2b and its physical counterpart (see movies below) when evolved with goevo.
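To illustrate what buoyancy, drag, Coriolis and added-mass terms look like in such a model, here is a minimal sketch of body-frame blimp dynamics in surge, sway, heave and yaw, written in the diagonal-mass form common in marine-vehicle modelling. It is not the Webots model: pitch and roll are ignored, drag is linear, and all parameter values and names are hypothetical. The effective masses m11, m22 and m33 include added mass, and the Coriolis terms turn forward speed combined with yaw rate into sideways slip.

```python
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2


@dataclass
class BlimpParams:
    """Hypothetical parameters; effective masses include added-mass terms."""
    mass: float = 0.18                # kg, physical mass of hull and gondola
    buoyancy: float = 0.18 * GRAVITY  # N, neutrally buoyant by default
    m11: float = 0.25                 # effective surge mass (kg)
    m22: float = 0.35                 # effective sway mass (kg)
    m33: float = 0.02                 # effective yaw inertia (kg m^2)
    m_heave: float = 0.35             # effective heave mass (kg)
    d_surge: float = 0.05             # linear drag coefficients
    d_sway: float = 0.15
    d_yaw: float = 0.01
    d_heave: float = 0.15


def step(state, tau, p: BlimpParams, dt=0.01):
    """Advance body-frame velocities (u, v, w, r) by one explicit Euler step.

    Axes: x forward, y right, z down; tau = (surge thrust, heave thrust, yaw torque).
    """
    u, v, w, r = state
    tau_u, tau_w, tau_r = tau
    # Surge and sway are coupled through Coriolis/centripetal terms.
    du = (tau_u + p.m22 * v * r - p.d_surge * u) / p.m11
    dv = (-p.m11 * u * r - p.d_sway * v) / p.m22
    # Munk moment: unequal added masses produce a yaw torque when u and v are nonzero.
    dr = (tau_r - (p.m22 - p.m11) * u * v - p.d_yaw * r) / p.m33
    # Heave: weight minus buoyancy (z points down), plus vertical thrust and drag.
    dw = (p.mass * GRAVITY - p.buoyancy + tau_w - p.d_heave * w) / p.m_heave
    return (u + dt * du, v + dt * dv, w + dt * dw, r + dt * dr)


if __name__ == "__main__":
    params = BlimpParams()
    state = (0.0, 0.0, 0.0, 0.0)
    for _ in range(1000):  # 10 s of forward thrust plus a constant yaw torque
        state = step(state, (0.01, 0.0, 0.0005), params)
    u, v, w, r = state
    print(f"surge {u:.3f} m/s, sway {v:.3f} m/s, yaw rate {r:.3f} rad/s")
```

Explicit Euler is used only for brevity; a physics engine such as ODE would integrate the full 6-degree-of-freedom equations.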

At the time of writing, a simple model of our indoor slow flyer is under development. Our plan is to have evolution take place in simulation and to use the best-evolved controllers to form a small population that will be incrementally evolved on the physical airplane, with human assistance in case of imminent collision. However, we anticipate that evolved neural controllers will not transfer very well, because the difference between a simulated flyer and a physical one is likely to be quite large. This issue will probably be approached by evolving Hebbian-like synaptic plasticity, which we have shown to support fast self-adaptation to changing environments (cf. Urzelai and Floreano, 2000).
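In the approach of Urzelai and Floreano (2000), evolution selects for each synapse a learning rule and a learning rate rather than a fixed weight. The sketch below implements four Hebbian-like rules of that kind (plain Hebb, postsynaptic, presynaptic and covariance) for weights and activations in [0, 1]; the exact formulas and the names used here should be read as an illustrative reconstruction, not as a definitive statement of that paper.

```python
import math


def plain_hebb(w, x, y):
    """Strengthen the synapse when pre- (x) and postsynaptic (y) units are both active."""
    return (1.0 - w) * x * y


def postsynaptic(w, x, y):
    """Like plain Hebb, but weaken when the postsynaptic unit is active without input."""
    return w * (x - 1.0) * y + (1.0 - w) * x * y


def presynaptic(w, x, y):
    """Like plain Hebb, but weaken when the presynaptic unit is active alone."""
    return w * x * (y - 1.0) + (1.0 - w) * x * y


def covariance(w, x, y):
    """Strengthen when activities are similar, weaken when they differ."""
    f = math.tanh(4.0 * (1.0 - abs(x - y)) - 2.0)
    return (1.0 - w) * f if f > 0 else w * f


RULES = {
    "plain": plain_hebb,
    "postsynaptic": postsynaptic,
    "presynaptic": presynaptic,
    "covariance": covariance,
}


def update_weight(w, x, y, rule, eta):
    """One plasticity step; stays in [0, 1] when w, x, y and eta are in [0, 1]."""
    return w + eta * RULES[rule](w, x, y)
```

Because the weights keep changing during the robot's lifetime, a controller evolved in simulation could in principle readapt its synapses on the physical airplane instead of relying on weights tuned to the simulator.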