- Offer Profile

Embodied Intelligence means that an intelligent agent that has a body. Evidence of biological mental development has shown that an active body is indispensable for acquisition of intelligence.

Our research areas include, but not limited to: human-computer interactions; image and signal processing; pattern recognition; computer vision; content-based information retrieval; speech recognition; tactile recognition; machine learning; biologically motivated models of cognition, learning, and development; autonomous navigation; language processing and understanding; reasoning; action; decision making, intelligent robots (construction, control and training) and artificial intelligence.

Developmental Robots and Autonomous Mental Development

- Mental development

This line of research is to advance artificial intelligence using what we call the developmental approach. This new approach is motivated by human cognitive and behavioral development from infancy to adulthood. It requires a fundamentally different way of addressing the issue of machine intelligence. We have introduced a new kind of program: a developmental program. A robot that develops its mind through a developmental program is called a developmental robot. SAIL is the name of our first prototype of a developmental robot. It is a "living" machine. Dav is the next generation after SAIL.The concept of a developmental program does not mean just to make machines grow from small to big and from simple to complex. It must enable the machine to learn new tasks that a human programmer does not know about at the time of programming. This implies that the representation of any task that the robot learns must be generated by the robot itself, a well known holy grail in AI and fundamental for machine understanding.

In contrast with traditional thoughts that artificial intelligence should be studied within a narrow scope and that otherwise the complexity is out of control, the developmental approach aims to provide a broad and unified developmental framework, which is applicable to a wide variety of perceptual capabilities (e.g., vision, audition and touch), cognitive capabilities (e.g., situation awareness, language understanding, reasoning, planning, communication, decision making, task execution), behavioral capabilities (e.g., speaking, dancing, walking, playing music), motivational capabilities (e.g., pain avoidance, pleasure seeking, what is right and what is wrong) and the fusion of these capabilities. By the very nature of autonomous development, a developmental program does not require humans to manually model task-specific representation. Some recent evidence in neuroscience has suggested that the developmental mechanisms in our brain are probably very similar across different sensing modalities. This is good news since it means that the task of designing a developmental program is probably more tractable than traditional task-specific programming.

Eight requirements for practical AMD

A developmental robot that is capable of practical autonomous mental development (AMD) must deal with the following eight requirements:- Environmental openness: Due to the task-nonspecificity, AMD must deal with unknown and uncontrolled environments, including various human environments.

- High-dimensional sensors: The dimension of a sensor is the number of scalar values per unit time. AMD must directly deal with continuous raw signals from high-dimensional sensors (e.g., vision, audition and taction).

- Completeness in using sensory information. Due to the environmental openness and task nonspecificity, it is not desirable for a developmental program to discard, at the program design stage, sensory information that may be useful for some future, unknown tasks. Of course, its task-specific representation autonomously derived after birth does discard information that is not useful for a particular task.

- Online processing: At each time instant, what the machine will sense next depends on what the machine does now.

- Real-time speed: The sensory/memory refreshing rate must be high enough so that each physical event (e.g., motion and speech) can be temporally sampled and processed in real time (e.g., about 15Hz for vision). This speed must be maintained even when a full (very large but finite) physical "machine brain size'' is used. It must handle one-instance learning: learning from one instance of experience.

- Incremental processing: Acquired skills must be used to assist in the acquisition of new skills, as a form of ``scaffolding.'' This requires incremental processing. Thus, batch processing is not practical for AMD. Each new observation must be used to update the current complex representation and the raw sensory data must be discarded after it is used for updating.

- Perform while learning: Conventional machines perform after they are built. An AMD machine must perform while it ``builds'' itself "mentally.''

- Scale up to large memory: For large perceptual and cognitive tasks, an AMD machine must handle multimodal contexts, large long-term memory and generalization, and capabilities for increasing maturity, all in real time speed.

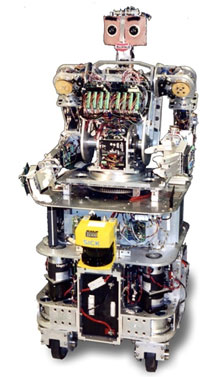

SAIL

-

- 13 DOF

- Main CPU Dual Pentium IV

2.1 GHZ

50 Giga SCSI drives,

1 Giga memory - Two CCD color cameras,

auditory sensor, force sensors - SAIL developmental

program for autonomous

mental development - 202 Kg

Dav

-

- 48 DOF

- Main CPU Quad Pentium, 2 Giga memory,

100 Giga SCSI drives - 11 Embedded processors

- Two color CCD cameras, auditory, laser range scanner, touch sensors

- Wireless Internet

- Autonomous Mental Development

- 242 Kg

DARPA Microrobot Project - Reconfigurable Adaptable MicroRobots

- The goal of this project is to design, build, and test

a prototype of micro-robot that will exhibit multiple forms of locomotion.

This will be achieved through suction cups located at the robot extremities.

The suction cups also provide the ability to climb walls and flip over

obstacles. The robot will be able to work individually or team up with other

robots to perform a given mission. This research and development effort are

being conducted by an interdisciplinary group of faculty from the

Departments of Mechanical Engineering (Ranjan Mukherjee), Electrical

Engineering (R. Lal Tummala and Ning Xi), and Computer Science (Sridhar

Mahadevan and Juyang (John) Weng).

Accomplished Milestones- Design and test robot locomotion mechanism

- Fabricate mechanical structure, sensors, and suction cups

- Demonstrated wall climbing behavior

- Demonstrated its usefullness as as remote sensor (camera) for reconnaissance applications

- Demonstrated cooperative behavior on Khepera robots

Short-Term Milestones

- Demonstrate sliding and flipping modes of locomotion

- Demonstrate wireless operation

- Validate sensor-based learning of individual behaviors

- Fabricate robot prototypes

- Demonstrate cooperative behavior using several robots

SHOSLIF for Multimodal Learning

- Comprehensive sensory learning is the treatment of theories and techniques for computer systems to automatically learn to understand comprehensive visual, auditory and other sensory information with minimal human-imposed rules about the world. The concept of comprehensive learning here implies two coverages: comprehensive coverage of the sensory world and comprehensive coverage of the recognition algorithm. The SHOSLIF is a framework aiming to provide a unified theory and methodology for comprehensive sensor-actuator learning. Its objective is not just to attack a particular sensory or actuator problem, but a variety of such problems. It addresses critical problems such as how to automatically select the most useful features; how to automatically organize sensory and control information using a coarse-to-fine space partition tree which results in a very low, logarithmic time complexity for content-based retrieving from a large visual knowledge base, how to handle invariance based on learning, how to enable on-line incremental learning, how to conduct autonomous learning etc.

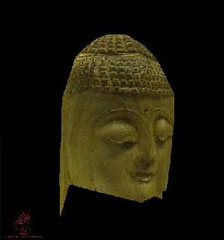

The Camera-Aided Virtual Reality Builder

- Creation of realistic natural texture-mapped 3-D

objects is very tedious using conventional computer graphics techniques. The

user normally constructs a 3-D shape of the object through a computer-aided

design process and then the texture patches are selected by hand and mapped

onto the object surface manually, piece by piece. Or, there are some systems

which scan a subject using laser to obtain the range map as well as surface

reflectance map of the subject, but these systems are very expensive and are

intrusive to human and animal subjects, and they do not work for large

objects, such as buildings, and fail on dark or specular surfaces. The real

take-off of virtual reality (VR) in this age of multimedia requires a

virtual reality builder that is easy to use, inexpensive, and gives

realistic results. The Camera Aided Virtual Reality Builder (CAVRB) just

needs a few images of the object to be modeled. It computes the 3-D shape of

an object from these images using matched object points specified by the

user through an intuitive, graphics-based user interface. The object points

are grouped together as polygonal or bezier surface patches and are

reconstructed in 3-D space.The natural textures are then mapped on to the

3-D shape directly from those images and the 3-D scenes can also be edited

to improve the features. With the CAVRB, it is very convenient to build

realistic VR scenes or objects from real objects, or to incorporate

additional VR objects into VR scenes constructed using existing methods.

Virtually any real-world object can be converted into a VR object using the

CAVRB, such as human faces, human bodies, buildings, trees, gardens, cars,

machine parts, geological terrains, interior of a room, furniture, etc.

Advantages include: (1) Easier to use. The system does not require the user to provide the 3-D shape of the object. (2) Lower in cost. The system uses images taken by commercially available CCD video or still cameras rather than an expensive computer aided design system or a laser scanner. Both of these require experts to run, and the laser scanners are limited in applicability. (3) Realistic result. The texture mapped directly from camera images gives human observers a very realistic impression because the ability of the human vision system to perceive 3-D shape and surface material from shading, specularity, texture, and texture variation. (4) The resulting VR object is truly navigable and compact in storage. Some technology requires multiple views for each hot spot (viewing position) and the user stitches these images into a panoramic screen for each hot spot. The observer can only view the object by jumping from one hot spot to next, but cannot view between any two hot spots or beyond. Such a hot-spot-based representation is redundant for those surface patches that are visible from more than one hot spot.

- One of eight photographs used in constructing the Model

- Snapshots of re-constructed 3-D model