H05: Universities and Research in Robotics

Université de Sherbrooke

Offer Profile

IntRoLab is a research laboratory that studies, develops, integrates and uses mechatronics and artificial intelligence methodologies for the design of autonomous and intelligent systems. Research activities involve software and hardware design of mobile robots, embedded systems and autonomous agents, and in-situ evaluation. Research conducted at the lab is pragmatic, oriented toward overcoming the challenges of making robots and intelligent systems usable in real-life situations to improve quality of life, and toward discovering how to give intelligence to machines. Application areas are service robots, all-terrain robots, interactive robots, assistive robotics, telehealth robotics, automobile and surgical robots.

Product Portfolio

MECHATRONIC

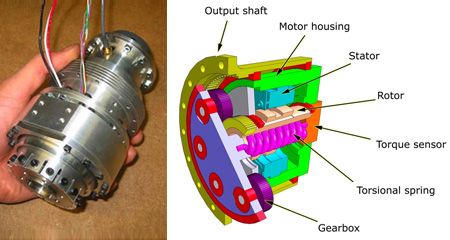

DEA

Differential-Elastic Actuator- Building machines that can precisely control interaction with their environment begins with actuators specially designed for that purpose. To that end, a new compact design for high-performance actuators, especially adapted for integration in robotic mechanisms, has been developed. This design uses a mechanical differential as its central element. Differential coupling between an intrinsically high-impedance transducer and an intrinsically low-impedance spring element provides the same benefits as serial coupling. However, differential coupling allows interesting new design implementations, especially for rotational actuators.

DDRA

Dual Differential Rheological Actuator- Robotic systems are increasingly moving out of factories, stepping into a dynamic world full of unknowns, where they must interact in a safe and versatile manner. Traditional actuation schemes, which rely on position control and stiff actuators, often fail in this new context. There have been many attempts to modify them by adding a full suite of force and position sensors and by using new control algorithms but, in most cases, the naturally high output inertia and the internal transmission nonlinearities such as friction and backlash remain quite burdensome.

The proposed actuation scheme addresses many of those limitations. The DDRA uses a differential mechanism and two magnetorheological brakes coupled to, for example, an electromagnetic motor. This configuration enables the DDRA to act as a high-bandwidth, very low-inertia, very low-friction, backlash-free torque source that can be controlled to track any desired interaction dynamics. The advantages include safety and robustness due to extreme backdrivability, and great versatility in interactions. In a more traditional context, the actuator’s low inertia, eliminated backlash and reduced nonlinearities allow for greater accelerations and more precise positioning, thus improving productivity and quality.

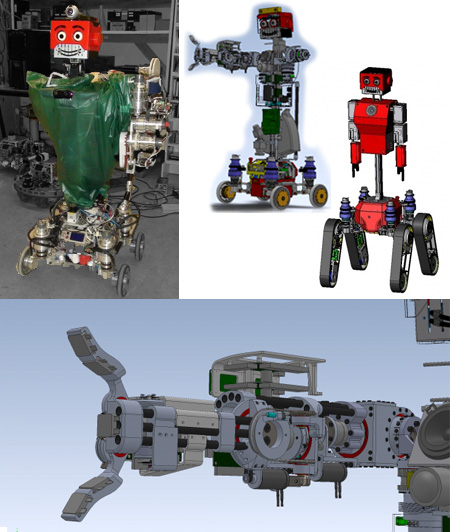

AZIMUT

Omnidirectional Robotic Platform with Legs-Tracks-Wheels- AZIMUT addresses the challenge of making multiple locomotion mechanisms available on the same robotic platform. AZIMUT has four independent articulations that can be wheels (as shown above), legs or tracks, or a combination of these. By changing the direction of its articulations, AZIMUT can also move sideways without changing its orientation, making it omnidirectional. All these capabilities give the robot the ability to move in tight areas. AZIMUT is designed to be highly modular: for instance, the actuators are placed in the articulations so that the wheels can easily be replaced by leg-track articulations for all-terrain operations. Stability and compliance of the platform are enhanced by adding a vertical suspension and using elastic actuators for the motorized direction of AZIMUT’s articulations. An elastic element is placed in the actuation mechanism and a sensor measures its deformation, making it possible to sense and control the torque at the actuator’s end. This should improve robot motion over uneven terrain by letting the robot feel the surface on which it operates. Mechatronic modules, such as the wheel-motor that is used in all configurations, use a distributed processing architecture with multiple microcontrollers communicating through shared data buses. AZIMUT's design provides a rich framework to create a great variety of robots for indoor and outdoor uses.
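The torque sensing described for AZIMUT's elastic actuators can be illustrated with a minimal sketch. It assumes a linear torsional spring (Hooke's law) and a simple proportional torque loop; the function names, the stiffness value and the gain are hypothetical and are not taken from the AZIMUT design.

```python
def estimate_torque(deflection_rad: float, stiffness: float) -> float:
    """Hooke's law for a torsional spring: torque = stiffness * deflection.

    Assumes a linear elastic element; stiffness is in N*m/rad.
    """
    return stiffness * deflection_rad


def torque_control_step(desired_torque: float, deflection_rad: float,
                        stiffness: float, gain: float) -> float:
    """One step of a proportional torque loop (hypothetical controller).

    Returns a motor command proportional to the torque error.
    """
    error = desired_torque - estimate_torque(deflection_rad, stiffness)
    return gain * error


if __name__ == "__main__":
    # Hypothetical values: 50 N*m/rad spring deflected by 0.1 rad.
    print(estimate_torque(0.1, 50.0))
    print(torque_control_step(6.0, 0.1, 50.0, gain=2.0))
```

Because the torque is inferred from a measured deformation, the same sensor serves both for sensing contact with the terrain and for closing the control loop.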

CRI - Roball, Spherical Robot

Tito - Robot Interacting with Children with Autism- Making a robot that can move in a home environment filled with all kinds of obstacles requires particular locomotion capabilities. A mobile robotic toy for toddlers would have to move around other toys and objects, and be able to withstand rough play. Encapsulating the robot inside a sphere and using this sphere to make the robot move around in the environment is one solution. The robot, being spherical, can navigate smoothly through obstacles, and create simple and appealing interactions with toddlers. The encapsulating shell of the robot helps protect its fragile electronics. Roball's second prototype was specifically developed to be a toy and used to study interactions between a robot and toddlers using quantitative and qualitative evaluation techniques. Observations confirm that Roball's physical structure and locomotion dynamics generate interest and various interplay situations influenced by environmental settings and the child's personality. Roball is currently being used to see how child interaction can be perceived directly from onboard navigation sensors.

DEVICES AND TOOLS

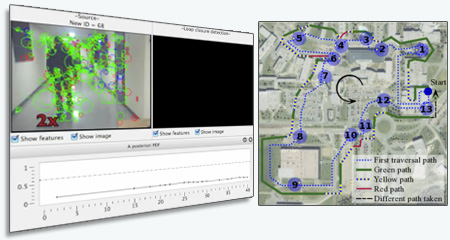

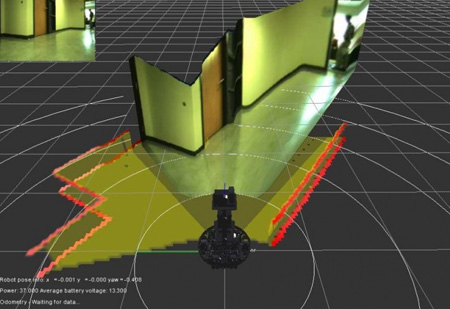

RTAB-Map

Real-Time Appearance-Based Mapping- Loop closure detection is the process of finding a match between the current location and a previously visited one in SLAM (Simultaneous Localization and Mapping). Over time, the time required to process new observations increases with the size of the internal map, which may affect real-time processing. RTAB-Map is a novel real-time loop closure detection approach for large-scale and long-term SLAM. Our approach is based on efficient memory management that keeps the computation time for each new observation under a fixed time limit, thus respecting the real-time limit for long-term operation. Results demonstrate the approach's adaptability and scalability using one custom data set and four standard data sets.
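The idea of bounding per-observation time through memory management can be sketched as follows. This is a simplified illustration, not the RTAB-Map implementation: it assumes that matching cost grows linearly with the number of locations searched, and that the oldest locations are the ones moved out of the searched set. The class, field names and cost model are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BoundedMemory:
    time_limit_ms: int          # budget per new observation (assumed)
    cost_per_location_ms: int   # hypothetical matching cost per location
    working: List[int] = field(default_factory=list)    # searched for loop closures
    long_term: List[int] = field(default_factory=list)  # stored, but not searched

    def estimated_time_ms(self) -> int:
        """Estimated processing time under the assumed linear cost model."""
        return len(self.working) * self.cost_per_location_ms

    def add_observation(self, location_id: int) -> None:
        """Add a location, then transfer the oldest ones until the budget holds."""
        self.working.append(location_id)
        while len(self.working) > 1 and self.estimated_time_ms() > self.time_limit_ms:
            self.long_term.append(self.working.pop(0))


if __name__ == "__main__":
    memory = BoundedMemory(time_limit_ms=500, cost_per_location_ms=100)
    for location in range(10):
        memory.add_observation(location)
    print(memory.working, memory.long_term)
```

Nothing is discarded in this sketch: locations moved to the long-term list remain available, only the set that is searched at each step stays bounded.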

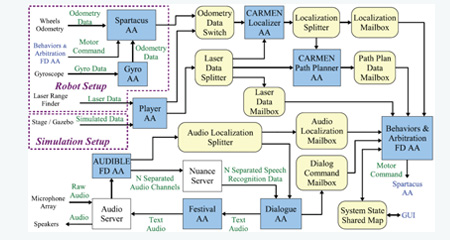

AUDIBLE

Sound Source Localization, Separation and Processing- An artificial auditory system that gives a robot the ability to locate and track sounds, as well as the possibility of separating simultaneous sound sources and recognising simultaneous speech. We demonstrate that it is possible to implement these capabilities using an array of microphones, without trying to imitate the human auditory system. The sound source localisation and tracking algorithm uses a steered beamformer to locate sources, which are then tracked using a multi-source particle filter. Separation of simultaneous sound sources is achieved using a variant of the Geometric Source Separation (GSS) algorithm, combined with a multi-source post-filter that further reduces noise, interference and reverberation. Speech recognition is performed on separated sources, either directly or by using Missing Feature Theory (MFT) to estimate the reliability of the speech features. The results obtained show that it is possible to track up to four simultaneous sound sources, even in noisy and reverberant environments. Real-time control of the robot following a sound source is also demonstrated. The sound source separation approach we propose achieves a 13.7 dB improvement in signal-to-noise ratio compared to a single microphone when three speakers are present. In these conditions, the system demonstrates more than 80% accuracy on digit recognition, higher than most human listeners could obtain in our evaluation when recognising only one of these sources. All these new capabilities make it possible for humans to interact more naturally with a mobile robot in real-life settings.

The open source implementation is called ManyEars and is available at: http://manyears.sourceforge.net
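The principle of a steered beamformer can be illustrated with a minimal time-domain delay-and-sum sketch. It is a simplification for illustration only and does not reproduce the ManyEars algorithm: each candidate direction is assumed to map to a set of integer sample delays, and the direction whose aligned sum carries the most energy is selected. The function names and the candidate table are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def steered_power(signals: Sequence[Sequence[float]],
                  delays: Sequence[int]) -> float:
    """Energy of the delay-and-sum output for one candidate steering.

    signals: one equal-length sample list per microphone.
    delays: per-microphone delay, in samples, for the candidate direction.
    """
    n = len(signals[0])
    power = 0.0
    for t in range(n):
        summed = 0.0
        for signal, delay in zip(signals, delays):
            index = t + delay
            if 0 <= index < n:  # samples outside the window count as silence
                summed += signal[index]
        power += summed * summed
    return power


def locate(signals: Sequence[Sequence[float]],
           candidates: Dict[str, Tuple[int, ...]]) -> str:
    """Return the candidate direction with the highest steered power."""
    return max(candidates, key=lambda name: steered_power(signals, candidates[name]))


if __name__ == "__main__":
    # Two microphones; the same pulse reaches the second one 3 samples later.
    mic0 = [0.0] * 32
    mic1 = [0.0] * 32
    mic0[10] = 1.0
    mic1[13] = 1.0
    directions = {"left": (0, -3), "front": (0, 0), "right": (0, 3)}
    print(locate([mic0, mic1], directions))
```

When the delays match the true propagation difference, the pulses add coherently and the output energy peaks, which is what makes the steering direction observable.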

MARIE

Mobile Autonomous Robot Integrated Environment- MARIE is a design tool for mobile and autonomous robot applications, designed to facilitate the integration of multiple heterogeneous software elements. It is a flexible tool based on a distributed model, allowing an application to be built using one machine or various networked machines, architectures and platforms. It has since been replaced by ROS from Willow Garage.

Note: MARIE is no longer maintained.

FlowDesigner

Graphical Programming Environment for Robots- FlowDesigner is a free (GPL/LGPL) data-flow-oriented development environment. It can be used to build complex applications by combining small, reusable building blocks. In some ways, it is similar to both Simulink and LabView, but is hardly a clone of either. FlowDesigner features a RAD GUI with a visual debugger. Although FlowDesigner can be used as a rapid prototyping tool, it can still be used for building real-time applications such as audio effects processing. Since FlowDesigner is not really an interpreted language, it can be quite fast. It is written in C++ and features a plugin mechanism that allows plugins/toolboxes to be easily added.

RobotFlow is a mobile robotics toolkit based on the FlowDesigner project. The visual programming interface provided by FlowDesigner helps people better visualize and understand what is really happening in the robot's control loops, sensors and actuators, by using graphical probes and debugging in real time. Note: RobotFlow is no longer maintained.

OpenECoSys

The Open Embedded Computing Systems (OpenECoSys) project provides free, open source hardware and software implementations for embedded computing devices. The project was started at Université de Sherbrooke's IntRoLab - Intelligent / Interactive / Integrated / Interdisciplinary Robot Lab. Over time, IntRoLab developed multiple embedded modules for its own mobile robot platforms. All modules are connected through a shared CAN (Controller Area Network) bus to form a distributed network of sensors and actuators that are used on advanced platforms such as the AZIMUT3 robot. Most of the embedded systems are based on Microchip microcontrollers that are inexpensive, powerful and versatile. Software tools, such as the NetworkViewer, were developed to allow monitoring of multiple internal variables in the distributed network and to facilitate the development of any application.

INTELLIGENT DECISION-MAKING

Autonomous Robot

Social, Intelligent and Autonomous Mobile Robot- The goal is to design the most advanced robots with the highest integrated capabilities of perception, action, reasoning and interaction, operating in natural settings. This involves integrating many research projects on the same robotic platform:

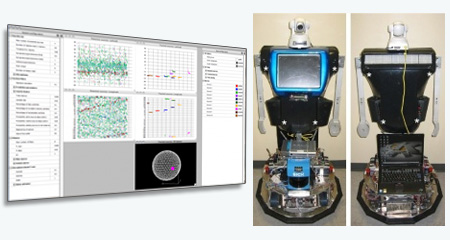

Johnny Jr, A Humanoid Robot

Johnny Jr is an interactive robot based on multiple projects (ADE, AUDIBLE, AZIMUT, HBBA). The robot has different sensors, principally configured for human presence detection. For instance, we use:

- A laser range detector (Hokuyo UTM-30LX) that detects people's legs over 180 degrees

- A Microsoft Kinect that locates in 3D up to four people in front of the robot

- A head-mounted camera that detects faces

- An 8-microphone array that localizes sound sources

Johnny Jr can also interact with humans in different ways, including:

- Voice

- Facial expressions

- Head movements

- Arm gestures

- Base mobility

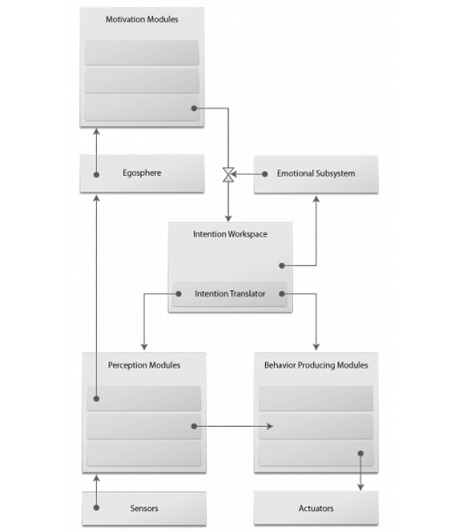

HBBA

Hybrid Behavior-Based Architecture- Our new Hybrid Behavior-Based Architecture (HBBA) combines our latest research in autonomous mobile robot control architectures and is the logical evolution of our Motivated Behavioral Architecture (MBA). It currently powers the mind of our humanoid robot, Johnny 0.

Our decisional structure remains heavily distributed. Motivation modules provide the system with high-level desires. The Intention Workspace acts as a blackboard, a shared space where motivation modules post their desires. The Intention Translator takes these desires and translates them into a tangible intention for the controlled robot. This means our motivation modules are loosely coupled with the actual robot, making them portable between different instances of our architecture. Selective attention mechanisms implemented within the translator try to make the most of our platforms' limited resources by selecting among competing perceptual and behavioral strategies in line with the current situation. The Egosphere surveys perceptual events and builds a more manageable abstraction of all the sensory inputs provided by our hardware. The Emotional Subsystem regulates the intensities of desires according to the simulated mood of the robot, which varies in relation to how well the current intention is being accomplished.

Its current implementation is a set of reusable ROS packages, mostly written in C++.
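The blackboard pattern described above can be sketched in a few lines. This is an illustrative Python sketch, not the HBBA ROS packages: the `Desire` fields, the resource budget and the greedy selection rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Desire:
    source: str       # motivation module that posted the desire
    goal: str         # hypothetical goal label
    intensity: float  # hypothetical priority of the desire
    cost: int         # hypothetical resource units needed to satisfy it


class IntentionWorkspace:
    """Blackboard: motivation modules post desires without knowing the robot."""

    def __init__(self) -> None:
        self._desires: List[Desire] = []

    def post(self, desire: Desire) -> None:
        self._desires.append(desire)

    def desires(self) -> List[Desire]:
        return list(self._desires)


def translate(workspace: IntentionWorkspace, budget: int) -> List[str]:
    """Pick the most intense desires that fit within the resource budget.

    A greedy stand-in for the translator's selective attention (assumed rule).
    """
    chosen: List[str] = []
    used = 0
    for desire in sorted(workspace.desires(), key=lambda d: d.intensity, reverse=True):
        if used + desire.cost <= budget:
            chosen.append(desire.goal)
            used += desire.cost
    return chosen


if __name__ == "__main__":
    workspace = IntentionWorkspace()
    workspace.post(Desire("safety", "avoid_obstacles", 0.9, 1))
    workspace.post(Desire("social", "greet_person", 0.6, 2))
    workspace.post(Desire("explore", "wander", 0.4, 2))
    print(translate(workspace, budget=3))
```

The motivation modules only depend on the workspace, so the same modules could be reused with a different translator for another robot, which is the loose coupling the description emphasizes.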

DCD

Collaborative Driving Systems- To eventually have automated vehicles operate in platoons, it is necessary to study what information each vehicle must have and with whom it must communicate for safe and efficient maneuvering in all possible conditions. By emulating platoons using a group of mobile robots, we demonstrate the feasibility of maneuvers (such as entering, exiting and recovering from an accident) using different distributed coordination strategies. The coordination strategies studied range from no communication to unidirectional or bidirectional exchanges between vehicles, and to fully centralized decisions by the leading vehicle. Instead of assuming that the platoon leader or all vehicles globally monitor what is going on, only the vehicles involved in a particular maneuver are concerned, distributing decisions locally within the platoon. Experimental trials were conducted using robots with limited, directional perception of one another, based on vision and obstacle avoidance sensing. Results confirm the feasibility of the coordination strategies in different conditions, and show various uses of communicated information to compensate for sensing limitations.

INTERFACES AND INTERACTION

TRInterface

Egocentric and Exocentric Teleoperation- Following the original Telerobot project, we began development on a novel 3D interface for teleoperated navigation tasks.

This interface combines, in real time:

- An extrusion of a SLAM-built 2D map of the environment

- A laser-based surface projection of a 2D video feed

- A 3D projection of a colored point cloud built from a stereoscopic camera

- A CAD-based 3D model of the robot

With this interface, the user can seamlessly transition from egocentric to exocentric viewpoints by moving the virtual camera around the controlled robot. As in modern 3D third-person video games, the user can set the viewpoint to best suit the task at hand, such as a top-down view to navigate tight spaces or a straight-ahead view to communicate with people.

Future work

A new, ROS-compatible and open source implementation that will take advantage of modern sensors like the Kinect is currently in development.

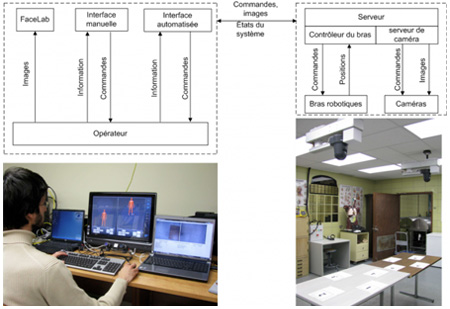

Teletrauma

Telecoaching in Emergency Rooms- Purpose: To compensate for the shortage of emergency specialists and to maintain quality of medical care in remote regions.

Methods: Develop and validate the concept of real-time coaching using a robotized camera system ceiling-mounted above a stretcher. Exploit a final prototype to evaluate its impact on surgical procedures under controlled conditions.

Results: Preliminary results demonstrate that i) the system allows a remote practitioner to easily view all surgical procedures used in the emergency room, and ii) a specialist can coach a nonspecialist to perform a surgical procedure using proper kinesiological gestures with many individuals surrounding the stretcher.

Conclusion: The system could provide help on demand and improve the level of services in trauma medicine for the population of regions where trauma surgeons are unavailable. The system could also decrease medical costs by providing remote support on surgical procedures used to stabilize unstable polytraumatized patients on their arrival in the trauma room.

Telerobot

Mobile Robot for Home Telepresence- Telehealth assistive technologies for homes constitute a very promising avenue to decrease the load on the health care system, to reduce hospitalization periods and to improve quality of life. Teleoperated from a distant location, a mobile robot can become a beneficial tool in health applications. However, design issues related to such systems are broad and mostly unexplored (e.g., locomotion and navigation in home settings, remote interaction and patient acceptability, evaluation of clinical needs and their integration into health care information systems). Designing a safe and effective robotic system for in-home teleassistance requires taking into consideration the complexities of having novice users remotely navigate a mobile robot in a home environment while they interact with patients.

An interdisciplinary and exploratory design methodology is adopted to develop a telepresence assistive mobile robot for homecare assistance of elderly people. Preliminary studies using robots, focus groups and interviews allowed us to derive preliminary specifications to design a new mobile robotic system named Telerobot. Telerobot’s locomotion mechanism provides improved mobility when moving on uneven surfaces, helping to provide a stable video feed to the user. Its control system is implemented for safe teleoperation. A study involving 10 rehabilitation professionals confirms that the system is usable in home environments. Analysis of teleoperation strategies used by novice teleoperators suggests that it is essential in a home environment that the teleoperation interface provide the user with visual feedback on the objects surrounding the robot, their distances relative to the robot and the size of the robot in the environment. Enhanced user interfaces to augment the operator’s perception of the environment were developed and tested in controlled conditions. These experiments are conducted with the objective of producing a complete, efficient and usable in-home teleassistance mobile robotic system.

CRI

Ecosystemic Studies of Child-Robot Interaction- Making a robot that can move in a home environment filled with all kinds of obstacles requires particular locomotion capabilities. A mobile robotic toy for toddlers would have to move around other toys and objects, and be able to withstand rough play. Encapsulating the robot inside a sphere and using this sphere to make the robot move around in the environment is one solution. The robot, being spherical, can navigate smoothly through obstacles, and create simple and appealing interactions with toddlers. The encapsulating shell of the robot helps protect its fragile electronics. Roball's second prototype was specifically developed to be a toy and used to study interactions between a robot and toddlers using quantitative and qualitative evaluation techniques. Observations confirm that Roball's physical structure and locomotion dynamics generate interest and various interplay situations influenced by environmental settings and the child's personality. Roball is currently being used to see how child interaction can be perceived directly from onboard navigation sensors.