Boston University

- Offer Profile

- Computer Science Department

Product Portfolio

Human Motion and Gesture Analysis

Computer-Human Interaction and Assistive Technology

- This ongoing project focuses on video-based human-computer interaction systems for people who need assistive technology for rehabilitation or as a means to communicate. Our first system, the "Camera Mouse," provides computer access by tracking the user's movements with a video camera and translating them into movements of the mouse pointer on the screen. The system has been commercialized and is in wide use in homes, hospitals, and schools in the U.S. and the U.K. Other systems detect the user's eye blinks or raised fingers and interpret the communication intent.
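
The step from tracked movement to pointer movement can be illustrated with a minimal sketch. It is not the Camera Mouse implementation: the function name `feature_to_pointer`, the `gain` parameter, and the idea of a calibrated rest position `origin_xy` are assumptions made for illustration, and the tracker that supplies `feature_xy` is taken as given.

```python
def feature_to_pointer(feature_xy, origin_xy, screen_size, gain=(4.0, 4.0)):
    """Map a tracked feature position (camera pixels) to a pointer position.

    Hypothetical helper: `origin_xy` is the feature's rest position recorded
    at calibration time, and `gain` amplifies small body movements.
    """
    screen_w, screen_h = screen_size
    # Displacement of the tracked feature from its rest position.
    dx = feature_xy[0] - origin_xy[0]
    dy = feature_xy[1] - origin_xy[1]
    # The rest position maps to the screen centre; displacement is scaled.
    x = screen_w / 2 + gain[0] * dx
    y = screen_h / 2 + gain[1] * dy
    # Clamp so the pointer never leaves the screen.
    x = min(max(x, 0), screen_w - 1)
    y = min(max(y, 0), screen_h - 1)
    return round(x), round(y)
```

With a 1920x1080 screen and the default gain, a feature that moves 10 camera pixels to the right of its rest position moves the pointer 40 screen pixels to the right of centre. A real system would likely also smooth the signal and may mirror the horizontal axis, both of which are omitted here.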

Human Pose Estimation (Current)

- The goal of this effort is to develop algorithms for articulated structure and motion estimation, given one or more image sequences. Articulated motion is exhibited by jointed structures like the human body and hands, as well as linkages more generally. Articulated structure and motion estimation algorithms are being developed that can automatically initialize themselves, estimate multiple plausible interpretations along with their likelihood, and provide reliable performance over extended sequences. To achieve these objectives, concepts from statistical machine learning, graphical models, multiple view geometry, and structure from motion are employed.

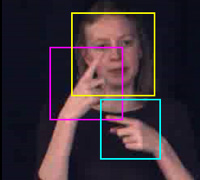

Gesture Analysis and Recognition (Current)

- The aim of this project is to develop techniques for automatic analysis and recognition of human gestural communication. The complexity of simultaneous expression of linguistic information on the hands, the face, and the upper body creates special challenges for computer-based recognition. Results of this effort include algorithms for localizing and tracking human hands, estimating hand pose and upper body pose, tracking and classifying head motions, and analyzing eye and facial gestures. Algorithms are also being developed for efficiently spotting and recognizing specific gestures of interest in video streams.

Large Lexicon Gesture Representation, Recognition, and Retrieval (Current)

- This project involves research on computer-based recognition of ASL signs. One goal is development of a "look-up" capability for use as part of an interface with a multimedia sign language dictionary. The proposed system will enable a signer either to select a video clip corresponding to an unknown sign, or to produce a sign in front of a camera, for look-up. The computer will then find the best match(es) from its inventory of thousands of ASL signs. Knowledge about linguistic constraints of sign production will be used to improve recognition. Fundamental theoretical challenges include the large scale of the learning task (thousands of different sign classes), the availability of very few training examples per class, and the need for efficient retrieval of gesture/motion patterns in a large database.

Image and Video Databases

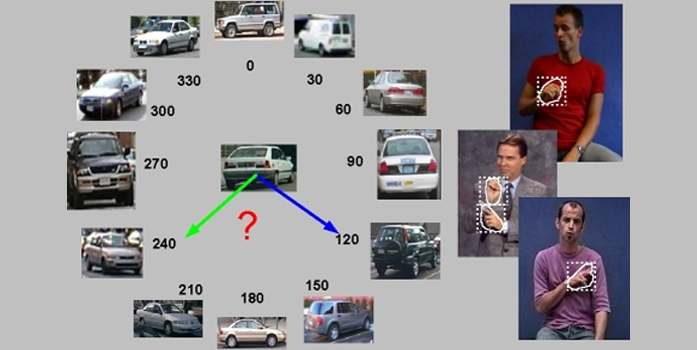

Motion-based Retrieval and Motion-based Data Mining (Current)

- The aim of this project is to develop methods for indexing, retrieval, and data mining of motion trajectories in video databases. Computer vision techniques are being devised for detecting and tracking moving objects, and for estimating statistical time-series models that describe each object's motion and can be used in motion-based indexing and retrieval. Algorithms are being developed that can discover clusters and other patterns in the extracted motion time-series data, and identify common versus unusual motion patterns.

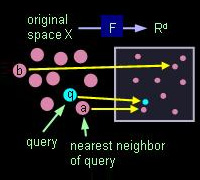

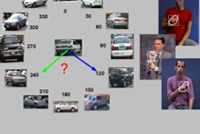

Retrieval and Classification Methods (Current)

- The goal of this project is to develop scalable classification methods that can exploit the information available in large databases of training data. Given an object to classify, one important problem is how to correctly identify the most similar objects in the database. An equally important problem is how to retrieve those objects efficiently, despite having to search a very large space. Results of this effort include algorithms for fast nearest neighbor retrieval under computationally expensive distance measures, optimizing the accuracy of nearest neighbor classifiers, and designing query-sensitive distance measures that automatically identify, in high-dimensional spaces, the dimensions that are the most informative for each query object.

Content-based Retrieval of Images on the World Wide Web (Past)

- The goal of this project is to develop algorithms for searching the web for images. Visual cues (extracted from the image) and textual cues (extracted from the HTML document containing the image) can be exploited. The technical challenges associated with the project are to deal with the staggering scale of the World Wide Web, to formulate effective image representations and indexing strategies for very fast search based on image content, and to develop user interface techniques that make image search fast, intuitive, and accurate. These algorithms have been deployed in the ImageRover system.

Medical Image Analysis

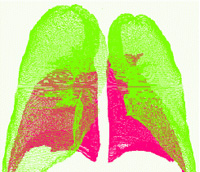

Segmentation of Anatomic Structures (Current)

- This ongoing project aims at developing automatic or semi-automatic methods for localizing and outlining anatomic structures in 2D and 3D data. This includes x-rays, computed tomography (CT) scans and magnetic resonance images (MRI). Our work has focused on structures of the chest, in particular, lungs, ribs, trachea, pulmonary fissures, pulmonary nodules, and blood vessels. A pulmonary fissure is a boundary between the lobes in the lungs. Our fissure segmentation method is based on an iterative, curve-growing process that adaptively weights local image information and prior knowledge of the shape of the fissure.

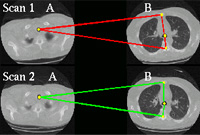

Detection and Classification in Medical Images (Current)

- We have developed methods for automatically detecting and measuring pulmonary nodule growth. These growth measurements are essential for lung cancer screening but are currently made by time-consuming, inaccurate, and inconsistent manual methods. Facilitating the diagnosis of lung cancer is important, because early detection and resection of small, growing pulmonary nodules can improve the 5-year survival rate of patients from 15% to 67%.
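
As an illustration of what a growth measurement can look like, the sketch below computes a volume doubling time from two volume measurements. This is a common way to summarize nodule growth, but the text above does not say which measure the project uses, so treat it as an assumption; the exponential growth model and both function names are likewise illustrative.

```python
import math


def sphere_volume(diameter_mm):
    """Volume in mm^3 of a sphere with the given diameter (a crude nodule model)."""
    return math.pi * diameter_mm ** 3 / 6.0


def doubling_time(volume_first, volume_second, days_between):
    """Volume doubling time in days, assuming exponential growth.

    A negative result means the volume shrank between the two scans.
    """
    if volume_first <= 0 or volume_second <= 0 or days_between <= 0:
        raise ValueError("volumes and time interval must be positive")
    if volume_second == volume_first:
        return math.inf  # no measurable growth
    return days_between * math.log(2) / math.log(volume_second / volume_first)
```

Because volume scales with the cube of the diameter, a small change in measured diameter corresponds to a much larger change in volume, which is one reason manual diameter measurements can give inconsistent growth estimates.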

Registration of Anatomical Structures (Current)

- In this ongoing project, we develop methods to align anatomical structures in medical image data sets. We have focused on registering structures in the chest, such as lung surfaces and pulmonary nodules. Our approaches use rigid- and deformable-body transformations.
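
To make the rigid-body case concrete, here is a minimal sketch of least-squares rigid alignment for 2D point sets with known correspondences. It is a simplified stand-in, not the project's method: the work described above concerns 3D structures and also deformable transformations, and the function names `fit_rigid_2d` and `apply_rigid_2d` are assumptions made for illustration.

```python
import math


def fit_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source onto target.

    `source` and `target` are equal-length lists of (x, y) pairs, where
    source[i] corresponds to target[i].
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        # Work with coordinates relative to each set's centroid.
        px, py, qx, qy = px - sx, py - sy, qx - tx, qy - ty
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    # The optimal rotation maximizes cos(theta)*dot + sin(theta)*cross.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target centroid.
    return theta, (tx - (c * sx - s * sy), ty - (s * sx + c * sy))


def apply_rigid_2d(points, theta, translation):
    """Rotate each point by theta about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + translation[0], s * x + c * y + translation[1])
            for x, y in points]
```

In practice correspondences between anatomical surfaces are not known in advance, so a step like this would typically sit inside an iterative scheme that alternates between matching points and re-estimating the transformation.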

Multicamera Vision Systems

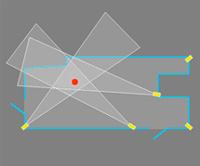

Placement and Control of Cameras in Video Sensor Networks (Current)

- The goal of this project is to develop methods for determining the optimal position and choice of video cameras to cover a given area and to serve specific vision task(s), and algorithms for prediction, camera control, and scheduling of computer vision tasks within a networked collection of video cameras. A predictive framework is being developed that can accrue a statistical model of temporal associations between events of interest observed within a sensor network. Finally, algorithms are being formulated that can exploit the statistical models in scheduling sensor network resources to accomplish certain tasks, like tracking objects of interest, or identifying all individuals.
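
The camera-choice part of this problem can be viewed as a coverage problem. The sketch below uses the standard greedy set-cover heuristic to pick cameras from a list of candidates; it is an illustration under assumed inputs, not the project's method, and it does not guarantee an optimal selection. The `coverage` mapping (candidate camera to the set of floor cells it sees) and the function name are hypothetical.

```python
def greedy_camera_selection(coverage, max_cameras):
    """Pick up to `max_cameras` candidates, each time adding the most new cells.

    `coverage` maps a candidate camera id to the set of area cells it can see.
    Returns (chosen camera ids in order, cells left uncovered).
    """
    uncovered = set().union(*coverage.values())
    chosen = []
    while uncovered and len(chosen) < max_cameras:
        remaining = [cam for cam in coverage if cam not in chosen]
        if not remaining:
            break
        # Candidate that sees the largest number of still-uncovered cells.
        best = max(remaining, key=lambda cam: len(coverage[cam] & uncovered))
        if not coverage[best] & uncovered:
            break  # no remaining candidate adds coverage
        chosen.append(best)
        uncovered -= coverage[best]
    return chosen, uncovered
```

A task-specific version would replace the cell count with a score that reflects the vision task, for example weighting cells by how often events of interest are observed there.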

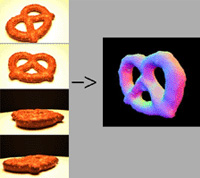

Shape and Motion Estimation from Multiple Views (Current)

- The goal of this project is to automatically construct detailed 3D models of objects given multiple views. In one family of approaches developed in this project, the aim has been to reconstruct a 3D polygonal mesh model and color texture map from multiple views of an object. Efforts have also focused on the problem of estimating an object's 3D motion field (scene flow) from multiple video streams. These methods explicitly account for uncertainties of the measurements as they affect the accuracy of the recovered model.

Object Recognition

Detector Families for Detection, Parameter Estimation and Tracking (Current)

- The main goal of this project is to develop algorithms for simultaneous detection, parameter estimation, and tracking of objects that exhibit high variability. The project focuses on three areas: (1) methods for dimensionality reduction that incorporate knowledge of object dynamics, (2) models that combine a collection of simpler local models to efficiently and accurately approximate nonlinear motion dynamics in a state-based model for tracking, and (3) algorithms that can detect an instance of the object class in the image and at the same time estimate the object's parameters.

Shape-based Segmentation, Description, and Retrieval (Current)

- The goal of this project is to develop automated methods for detecting, describing, and indexing shapes that appear in image and video databases. Retrieval by shape is perhaps one of the most challenging aspects of content-based image database search, due to image clutter, segmentation errors, etc. In addition, many shape classes of interest are related through deformations and/or may have variable structure. Methods are being developed that can detect, segment, and describe shapes in images despite clutter, shape deformation, and variable object structure.

Tracking

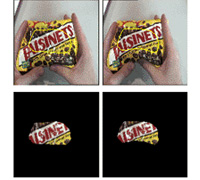

Region-based Deformable Appearance Models (Past)

- The aim of this project is to develop methods for tracking deforming objects. A mesh model is used to model the object's shape and deformations, and a color texture map is used to model the object's color appearance. Photometric variations are also modeled. Nonrigid shape registration and motion tracking are achieved by posing the problem in terms of an energy-based, robust minimization procedure, which provides robustness to occlusions, wrinkles, shadows, and specular highlights. The algorithms run at frame rate, and are tailored to take advantage of texture mapping hardware available in many workstations, PCs, and game consoles. The Active Blobs framework is one result of this effort.

Layered Graphical Models for Tracking (Current)

- Partial occlusions are commonplace in a variety of real world computer vision applications: surveillance, intelligent environments, assistive robotics, autonomous navigation, etc. While occlusion handling methods have been proposed, most methods tend to break down when confronted with numerous occluders in a scene. In this project, we are developing layered image-plane representations for tracking through substantial occlusions. An image-plane representation of motion around an object is associated with a pre-computed graphical model, which can be instantiated efficiently during online tracking.

Video-based Analysis of Animal Behavior

Infrared Thermal Video Analysis of Bats

- We have used infrared thermal cameras to record Brazilian free-tailed bats in California, Massachusetts, and Texas and developed automated image analysis methods that detect, track, and count emerging bats. Censusing natural populations of bats is important for understanding the ecological and economic impact of these animals on terrestrial ecosystems. Colonies of Brazilian free-tailed bats are of particular interest because they represent some of the largest aggregations of mammals known to mankind. It is challenging to census these bats accurately, since they emerge in large numbers at night.